Whether you’re using a smartphone, a laptop, or a robot vacuum cleaner, practically any electronic device relies on a file system. Without one, websites wouldn’t work, the photos and videos of your pets wouldn’t be available, and so on. Today we’ll briefly go through the basic terms and test which cluster size is worth choosing when formatting a disk.

What are File Systems?

First, you need to understand one fact: Windows Explorer, File Manager, or Finder is just the tip of the iceberg. Most people never see the invisible «gears» behind these applications. What’s more, without file systems, operating systems themselves could not exist because, paradoxically, they are also made up of files.

A file system is the way an operating system organizes data in order to store information as files on various storage media (HDDs, SSDs, floppy disks, flash drives, etc.). It is not a single mechanism but a collection of several important functions, which is why our «protagonist» comes with a number of additional concepts that need explaining.

Structure of the File System

Sectors and clusters (the latter are often called blocks) are the fundamental units of data storage. They are key to storage efficiency and to compatibility with different operating systems. These are the areas where our data is actually stored. We’ll talk about them in more detail in a section below.

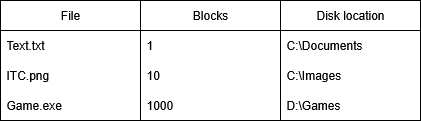

To track the location of files within clusters, file systems use a method called indexing. It records where a file physically resides on the disk so that the file can be quickly found, opened, edited, or deleted. A real-life example: a library catalog that lists the cabinet, shelf, and position of each book. Usually, file systems use tables that store various information about a file. In exFAT, this is the File Allocation Table, while in NTFS it is the Master File Table. Over time, more complex B-tree/B+tree structures have emerged that provide better support for media with large storage capacities.
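
To make the idea of an allocation table more concrete, here is a minimal Python sketch. It is a toy model, not the real on-disk layout of exFAT or NTFS: the `fat` list, the `directory` dictionary, and the `END_OF_CHAIN` and `FREE` markers are assumptions made up for illustration. Each table entry simply stores the number of the next cluster of the same file.

```python
# Toy model of a FAT-style allocation table (not a real on-disk format).
END_OF_CHAIN = -1  # hypothetical marker: last cluster of a file
FREE = None        # hypothetical marker: cluster is not used

# Index = cluster number, value = next cluster of the same file.
fat = [FREE, FREE, 5, FREE, FREE, 7, FREE, END_OF_CHAIN]

# Directory entry: file name -> number of the file's first cluster.
directory = {"song.mp3": 2}


def cluster_chain(name):
    """Return the clusters that hold the file, in reading order."""
    chain = []
    cluster = directory[name]
    while cluster != END_OF_CHAIN:
        chain.append(cluster)
        cluster = fat[cluster]  # follow the link to the next cluster
    return chain


print(cluster_chain("song.mp3"))  # [2, 5, 7]
```

Like the library catalog, the table lets the system jump from one «shelf» to the next without scanning the whole disk.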

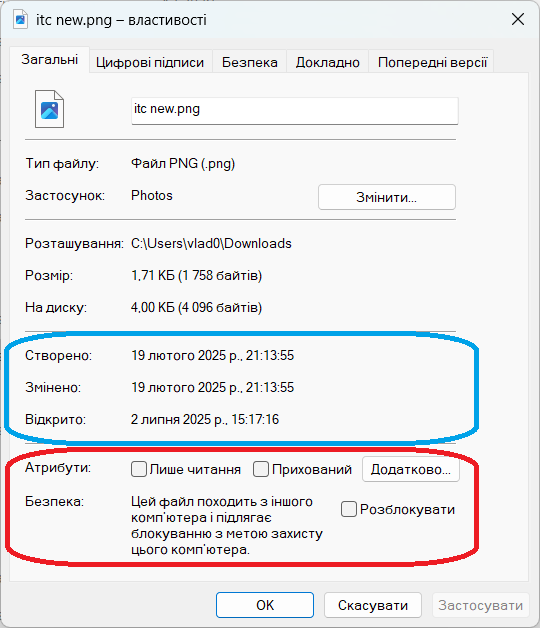

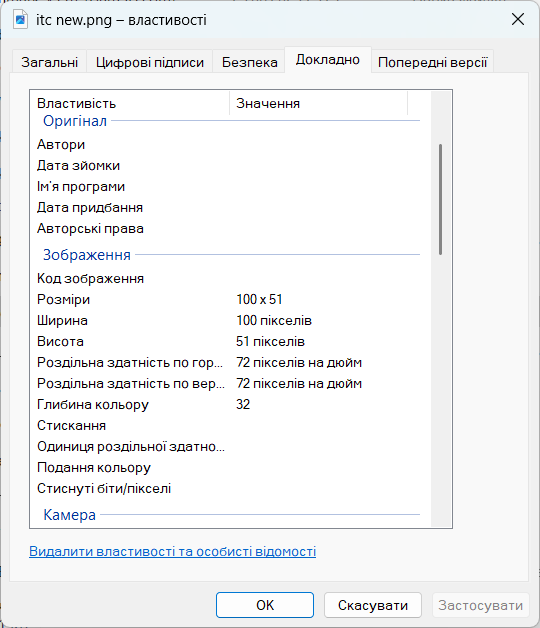

On a quiet working day, a user opens Explorer and sees neatly sorted files. This is possible because the system keeps additional information (metadata) for each file: date and time of creation, access rights, file size, etc. For music, for example, it can also store the artist, song title, album, bitrate, etc. To structure files better, you also need a directory system. All our files are stored in directories (folders), which have their own metadata as well.
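
As a small illustration, the sketch below reads some of this metadata with Python’s standard `os.stat` call. The `describe` helper and the `note.txt` file name are made up for the example, and the exact set of fields a real file system stores varies from one system to another.

```python
import os
import stat
import tempfile
from datetime import datetime


def describe(path):
    """Collect a few metadata fields that the file system keeps for a path."""
    info = os.stat(path)
    return {
        "size_bytes": info.st_size,
        "modified": datetime.fromtimestamp(info.st_mtime),
        "permissions": stat.filemode(info.st_mode),  # e.g. '-rw-r--r--'
        "is_directory": stat.S_ISDIR(info.st_mode),
    }


# Create a throwaway folder with one small file and inspect both.
with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "note.txt")
    with open(path, "w") as f:
        f.write("hello")
    file_meta = describe(path)
    folder_meta = describe(folder)

print(file_meta)
print(folder_meta)
```

Note that the folder has its own size, timestamps, and permissions, just like the file inside it.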

Ensuring Security

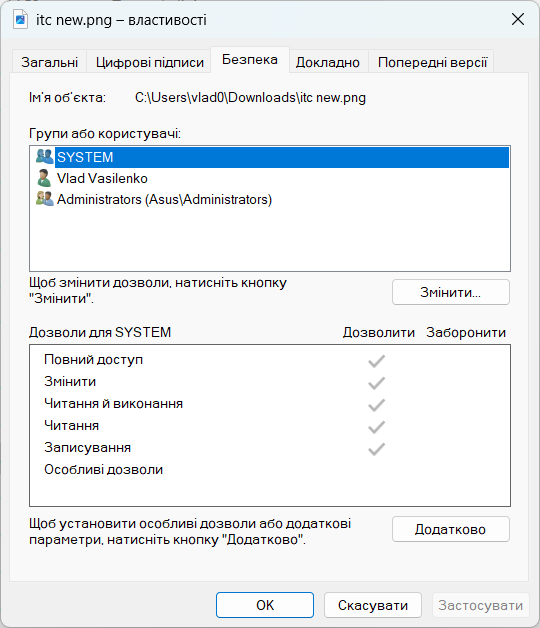

As we’ve already mentioned, metadata stores file access rights; this is the basis of the access control mechanism. Creating, reading, viewing, and deleting are common operations on files and folders. Therefore, it is necessary to clearly indicate who is allowed to perform them: a local user, the Administrator, or a specific application (such as an antivirus). Access control is also often needed to protect important system data from accidental deletion and to support auditing and access logging.

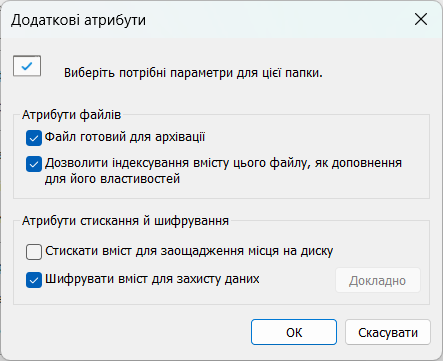

To keep personal information as confidential as possible, file systems support data encryption. The selected files, folders, or even entire disks are encrypted and can later be decrypted only with special «keys».

Increased performance and stability

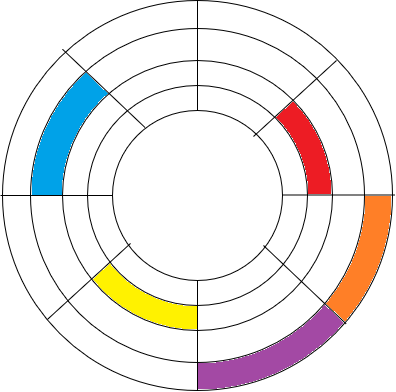

File sizes rarely match the cluster size exactly. This is especially true for relatively large video files, which can take up more than one gigabyte. That is why files are subject to fragmentation: the data is divided into smaller parts. These parts are not always stored contiguously on the disk. When one file is deleted and replaced with a larger one, part of the new file occupies the freed clusters, while the rest ends up somewhere else.

After long or intensive disk usage, files can become so fragmented that overall system speed drops. This is especially critical for HDDs. To counteract it, defragmentation was invented: the process of merging and reordering file fragments on a disk.
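
The scenario described above (delete a file, then write a larger one) can be played out in a short Python sketch. The disk here is just a list of ten clusters, and the `allocate`, `delete`, `fragments`, and `defragment` helpers are hypothetical names for this toy model. Real file systems use more elaborate allocation strategies.

```python
def allocate(disk, name, clusters_needed):
    """Give the file the first free clusters found, wherever they are."""
    free = [i for i, owner in enumerate(disk) if owner is None]
    if len(free) < clusters_needed:
        raise ValueError("not enough free clusters")
    for i in free[:clusters_needed]:
        disk[i] = name


def delete(disk, name):
    """Mark every cluster of the file as free again."""
    for i, owner in enumerate(disk):
        if owner == name:
            disk[i] = None


def fragments(disk, name):
    """Count the contiguous runs of clusters that belong to the file."""
    runs, previous = 0, None
    for owner in disk:
        if owner == name and previous != name:
            runs += 1
        previous = owner
    return runs


def defragment(disk):
    """Return a new layout with every file stored back to back."""
    order = list(dict.fromkeys(o for o in disk if o is not None))
    packed = [name for name in order for _ in range(disk.count(name))]
    return packed + [None] * (len(disk) - len(packed))


disk = [None] * 10
allocate(disk, "A", 3)   # A A A . . . . . . .
allocate(disk, "B", 3)   # A A A B B B . . . .
delete(disk, "A")        # . . . B B B . . . .
allocate(disk, "C", 5)   # C C C B B B C C . .

fragmented = fragments(disk, "C")
packed_disk = defragment(disk)
print(fragmented, fragments(packed_disk, "C"))  # 2 1
```

File C ends up in two pieces because it had to fill the gap left by A first. After defragmentation it is one contiguous run again.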

The TRIM function also belongs here: with it, the OS tells the SSD which blocks the file system no longer uses, so the drive can clean them up in advance.

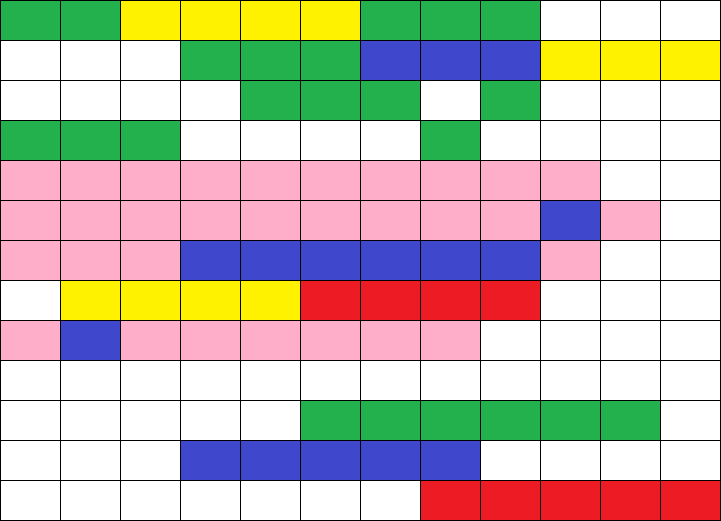

This is what a fragmented disk looks like in FS defragmentation applications

Another well-known way to speed things up is data caching. Recently used files, folders, metadata, etc. are kept in fast memory so that they can be reused quickly.

An interesting mechanism is copy-on-write (CoW), a method of managing shared resources. If several programs open the same data, none of them needs to create its own copy of the «parent» file. But as soon as one of the applications changes something in the file, a separate copy is guaranteed to be created for it.

CoW has one drawback, though. Since it creates a separate copy for every application that edits the data, the number of such copies grows, and data fragmentation grows with it.
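
Here is a minimal Python sketch of the copy-on-write idea. The `Handle` class is a made-up illustration, and for simplicity it copies the whole list of blocks on the first write, whereas real CoW file systems typically copy only the blocks that change.

```python
class Handle:
    """An application's view of a file: shared until the first write."""

    def __init__(self, shared_blocks):
        self._blocks = shared_blocks  # reference to the shared data, no copy
        self._private = False

    def read(self, index):
        return self._blocks[index]

    def write(self, index, value):
        if not self._private:
            # The copy is made only now, at the moment of the first change.
            self._blocks = list(self._blocks)
            self._private = True
        self._blocks[index] = value


original = ["a", "b", "c"]   # the «parent» file
reader = Handle(original)    # an application that only reads
editor = Handle(original)    # an application that edits

editor.write(0, "X")

print(reader.read(0))  # a
print(editor.read(0))  # X
print(original)        # ['a', 'b', 'c']
```

The reader and the parent file never see the change, and no copy exists until `write` is called. Every new editor adds one more private copy, which is exactly the drawback mentioned above.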

Types of file systems

In general, ordinary computer and smartphone users encounter a handful of basic file systems: APFS, exFAT, NTFS, ext4, and F2FS.

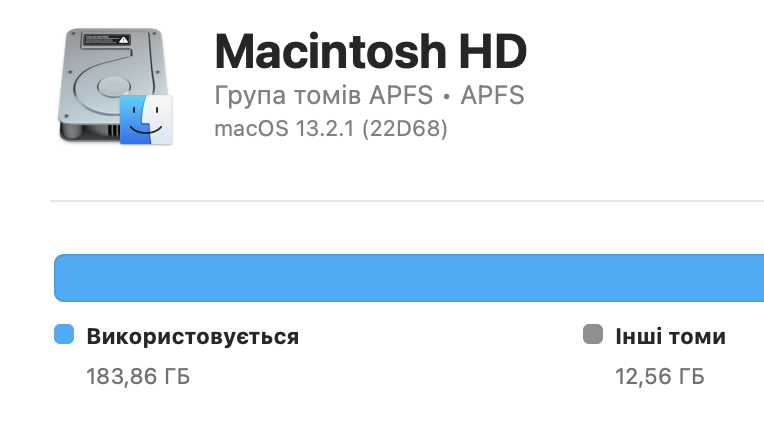

As you might guess, Apple always likes to do things differently, and APFS (Apple File System) is no exception. It was introduced in 2017 and is used in all of the company’s current operating systems: iOS, macOS, tvOS, and watchOS.

exFAT (Extended File Allocation Table) was introduced in 2006 as a replacement for the outdated FAT32, which is known to older users for not supporting files larger than 4 GB. Nowadays, exFAT is used as a file system for portable storage devices (memory cards, external disks, USB flash drives) because it is supported by most operating systems without any problems.

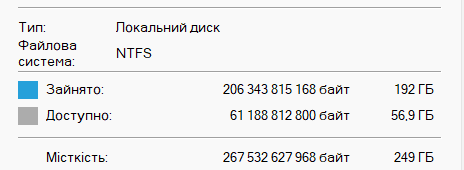

NTFS (New Technology File System) was developed by Microsoft back in 1993 to replace the FAT family of file systems in its Windows operating systems. The full transition took many years, lasting until the release of Windows XP in 2001. NTFS is still used for system disks in the latest Windows 11.

On devices running Linux (for example, Arch Linux), the file system is usually ext4 (Fourth Extended File System). It was introduced in 2006 to replace ext3 and is also used in Android. Google also uses F2FS (Flash-Friendly File System), developed by Samsung in 2012. It was created to better support NAND flash storage and to optimize read/write speeds.

Fundamental units of data storage

Let’s move from theory to more practical terms that can be seen on any computer without much searching. A sector is the smallest physical (hardware) unit of data storage on your HDD/SSD. The sector size depends on the storage device itself: from 512 bytes to 4096 bytes (4 KB) in modern systems.

The sector’s companion is the cluster (block), the smallest logical unit that a file system uses to allocate space for files. A cluster usually combines several sectors (8 sectors of 512 bytes make 1 cluster of 4096 bytes), but the two can also be the same size. Next come directories (folders) and volumes (disks), which are logical units as well. The C drive on which Windows runs is a good visual example of this structure.

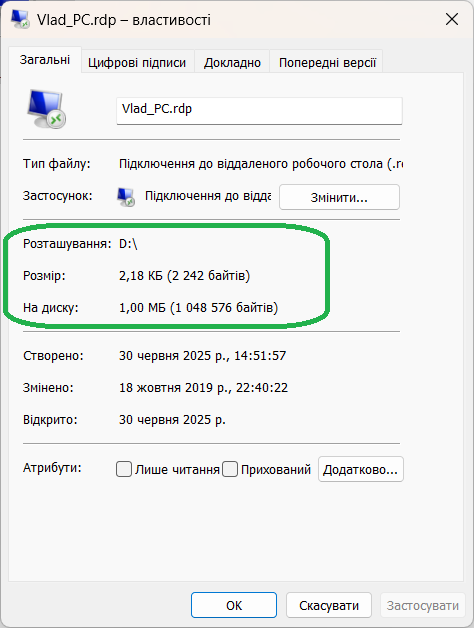

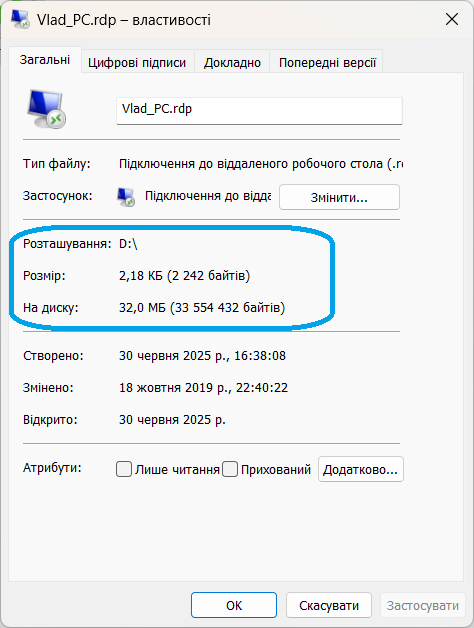

A peculiarity of both storage units is that even a small file occupies a whole unit. In the screenshots, you can see a simple example where an RDP file weighs 3 KB. On a disk with a 4 KB cluster, however, it takes up 1 KB more, so 25% of the cluster is wasted, which is already unpleasant. And with a 32 MB cluster (32768 KB), 99.99% of the space that could have held another file is lost.

The same file with different cluster sizes (1024 or 32768 KB) takes up different amounts of disk space.
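
The arithmetic behind these numbers fits in a few lines of Python. This is only a sketch of the rounding rule (a file always occupies a whole number of clusters). The `size_on_disk` and `wasted_percent` helpers are hypothetical names, and real file systems may add their own overhead on top.

```python
KB = 1024


def size_on_disk(file_size, cluster_size):
    """Space reserved for a file: always a whole number of clusters (bytes)."""
    clusters = -(-file_size // cluster_size)  # integer division, rounded up
    return clusters * cluster_size


def wasted_percent(file_size, cluster_size):
    """Share of the reserved space that the file does not actually use."""
    reserved = size_on_disk(file_size, cluster_size)
    return (reserved - file_size) / reserved * 100


rdp_file = 3 * KB
for cluster_kb in (4, 1024, 32768):
    reserved = size_on_disk(rdp_file, cluster_kb * KB)
    waste = wasted_percent(rdp_file, cluster_kb * KB)
    print(f"{cluster_kb} KB cluster: {reserved // KB} KB on disk, {waste:.2f}% wasted")
```

For the 3 KB file, the 4 KB cluster wastes 25% of the reserved space, while the 32768 KB cluster wastes about 99.99%.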

It would be logical to conclude that you should simply pick the smallest cluster and format the disk. But there is another side of the coin: the more clusters a file occupies, the more bookkeeping the file system has to do. (The space wasted inside a partly filled cluster, described above, is what is usually called internal fragmentation.) For example, you need to save a 50 MB (51200 KB) song. The clusters are 4 KB each. As a result, 51200 / 4 = 12,800 clusters are used. Now you turn on the song and notice that it lags slightly. The reason is the huge table that has to be traversed to find every fragment. In short, smaller clusters require larger allocation tables.
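
The same calculation can be repeated for other cluster sizes. In the Python sketch below, `clusters_needed` is a hypothetical helper, and the 4 bytes per table entry is an assumption used only to give a feel for how the bookkeeping grows. The actual overhead depends on the file system.

```python
ENTRY_BYTES = 4  # assumption: one 32-bit table entry per cluster


def clusters_needed(file_size_kb, cluster_kb):
    """Number of clusters a file occupies, rounded up to a whole cluster."""
    return -(-file_size_kb // cluster_kb)


song_kb = 51200  # the 50 MB song from the example
for cluster_kb in (4, 64, 256, 1024):
    count = clusters_needed(song_kb, cluster_kb)
    print(f"{cluster_kb} KB cluster: {count} clusters, "
          f"{count * ENTRY_BYTES} bytes of table entries")
```

With 4 KB clusters the song needs 12,800 entries, and with 1024 KB clusters only 50.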

Tests for the best cluster selection

When formatting a disk with the standard Windows tool, users can choose a volume label, file system type, and cluster size. Windows or the disk manufacturer sets the last two parameters by default. But what does a user gain by choosing the cluster size themselves? Let’s try to find out.

The usual Format window. To open it, right-click a disk in Explorer (any disk except C) and select Format.

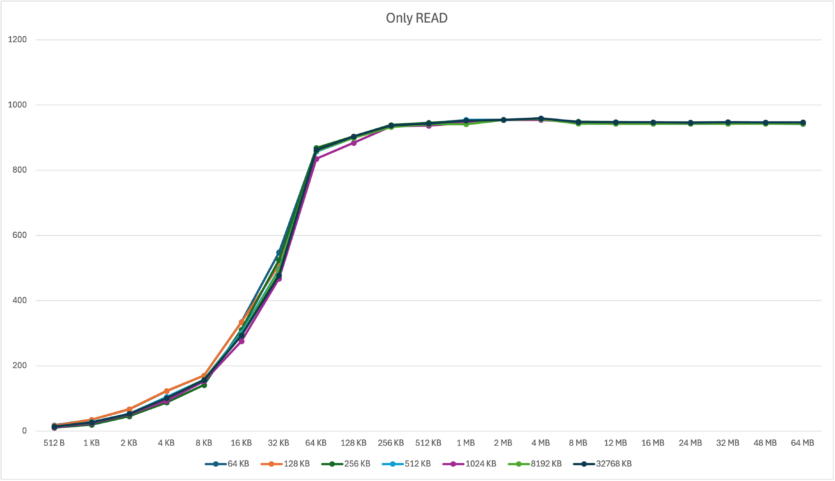

Let’s take the exFAT file system, which is the most widely compatible and lets you use the disk on most user devices: Windows, Linux, macOS, Android, or iOS/iPadOS. We’ll test the following cluster sizes: 64 KB, 128 KB, 256 KB, 512 KB, 1024 KB (1 MB), 8192 KB (8 MB), and 32768 KB (32 MB). We should say right away that the last two values are best avoided, not because of speed, but because of the large amount of space that every small file would occupy. This was discussed in the previous section.

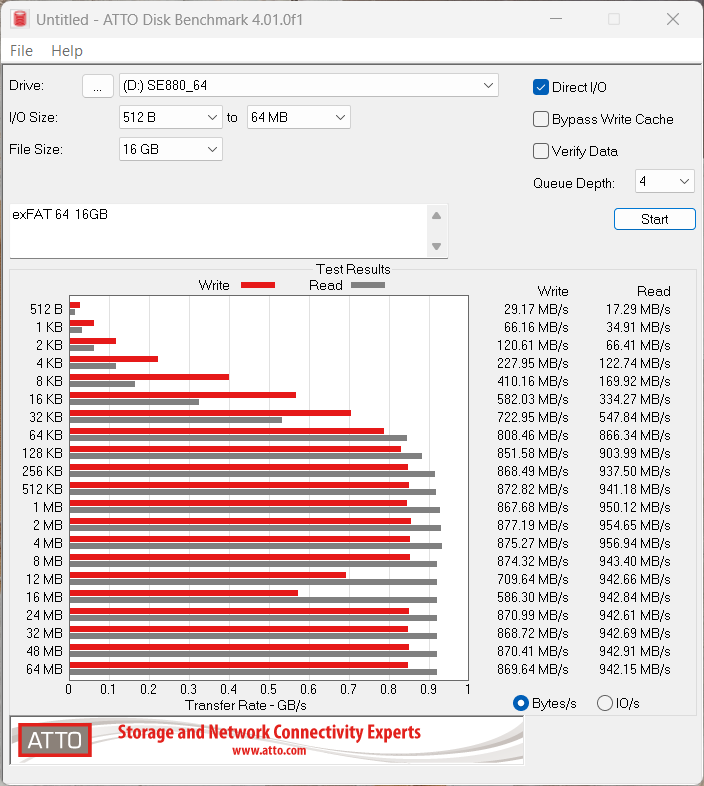

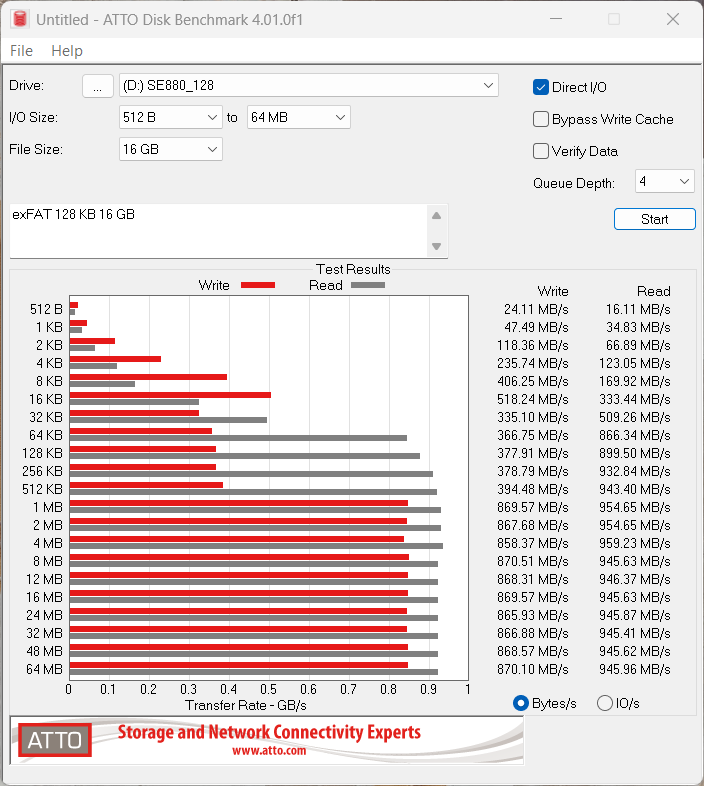

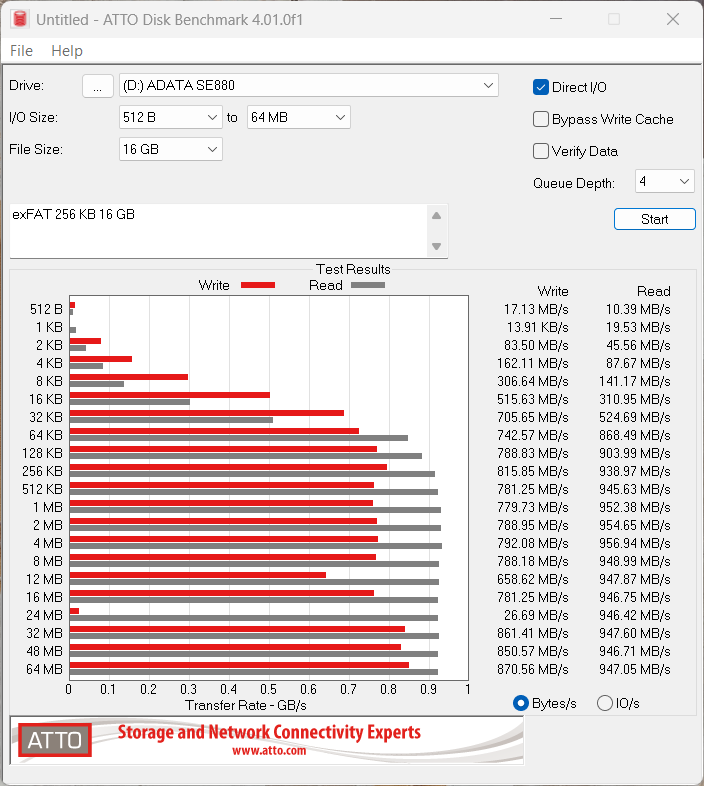

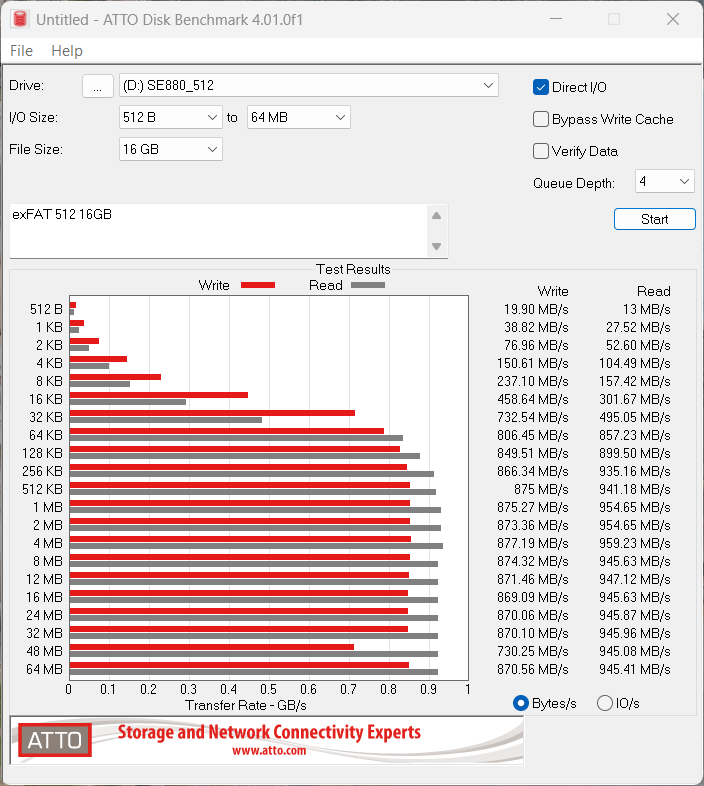

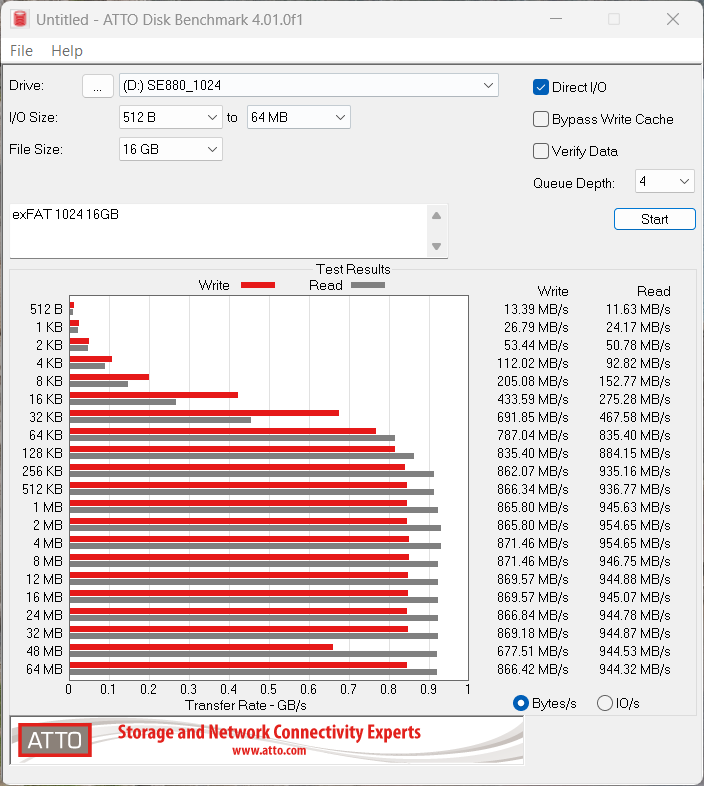

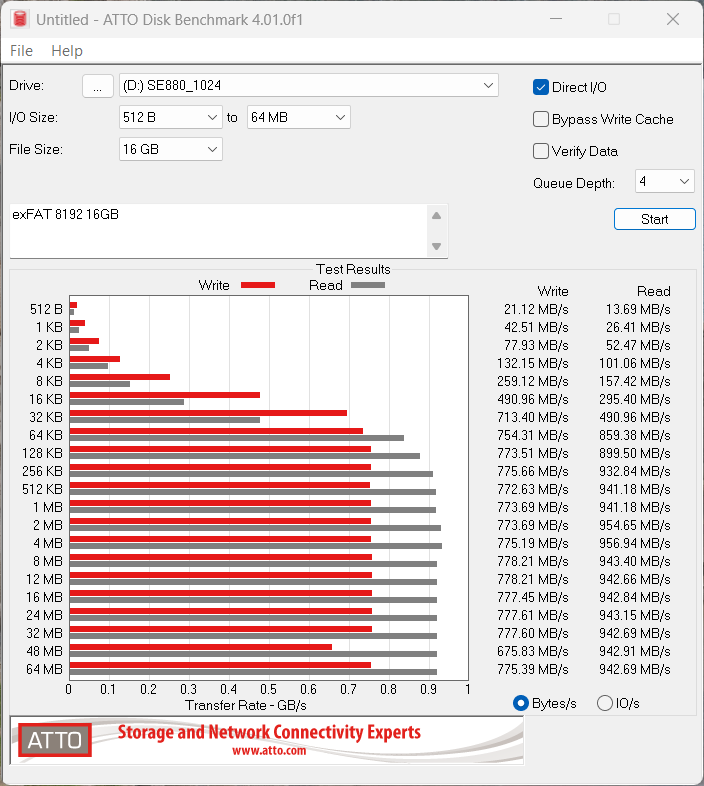

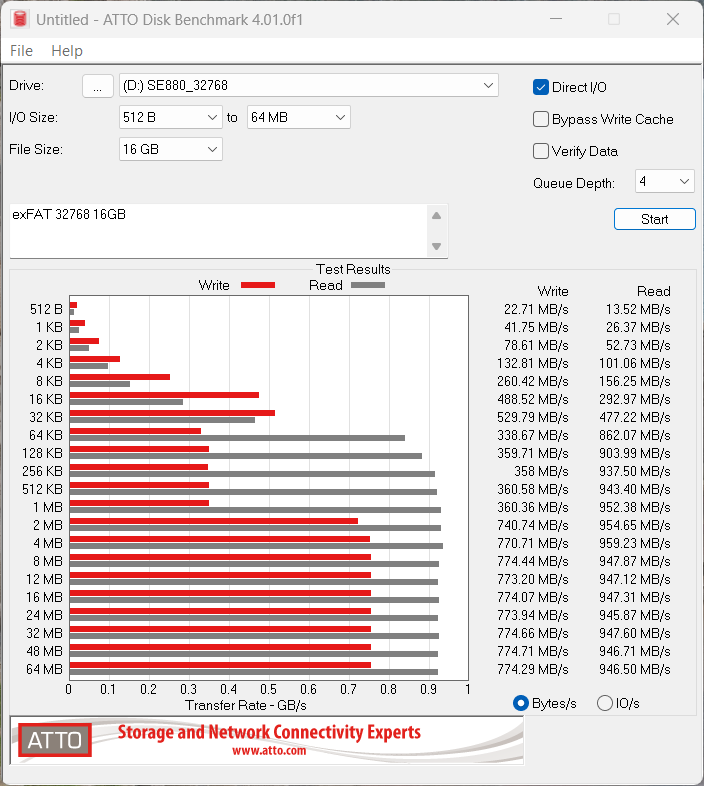

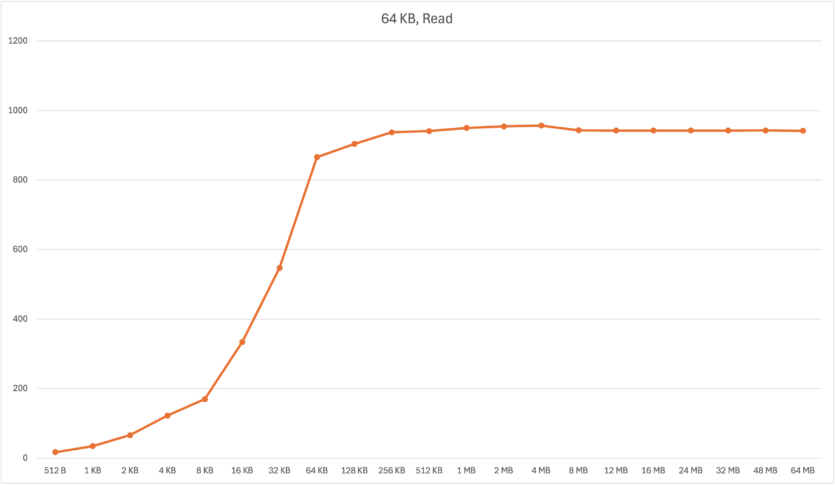

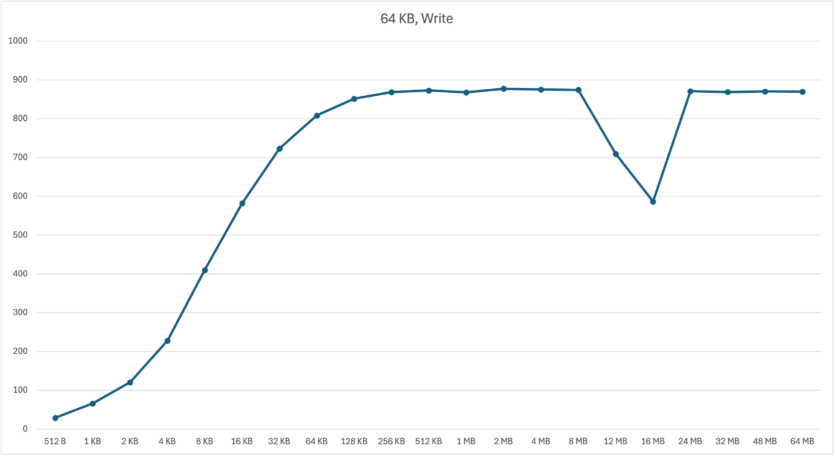

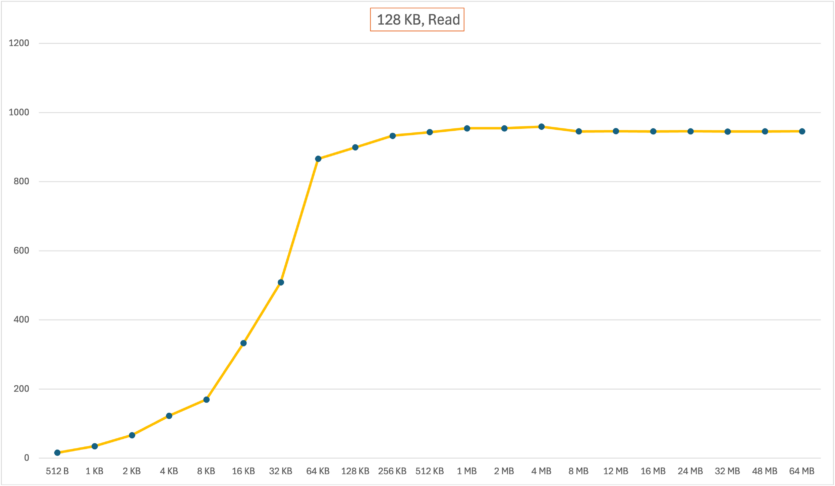

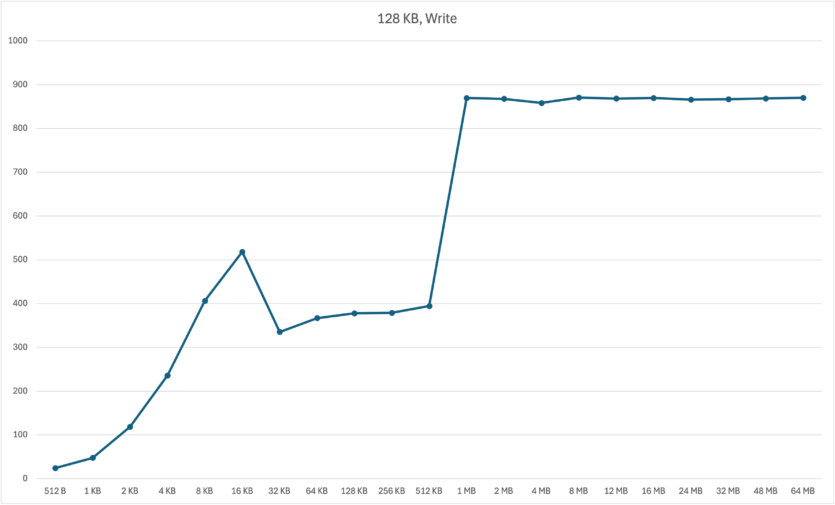

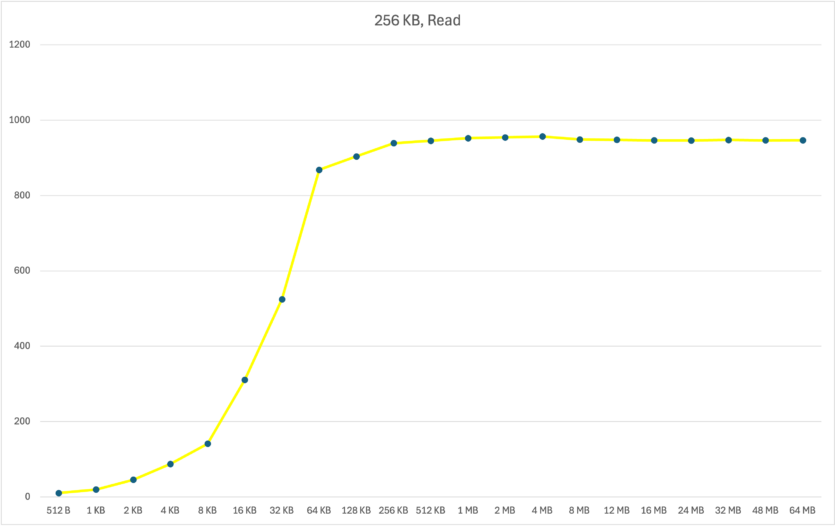

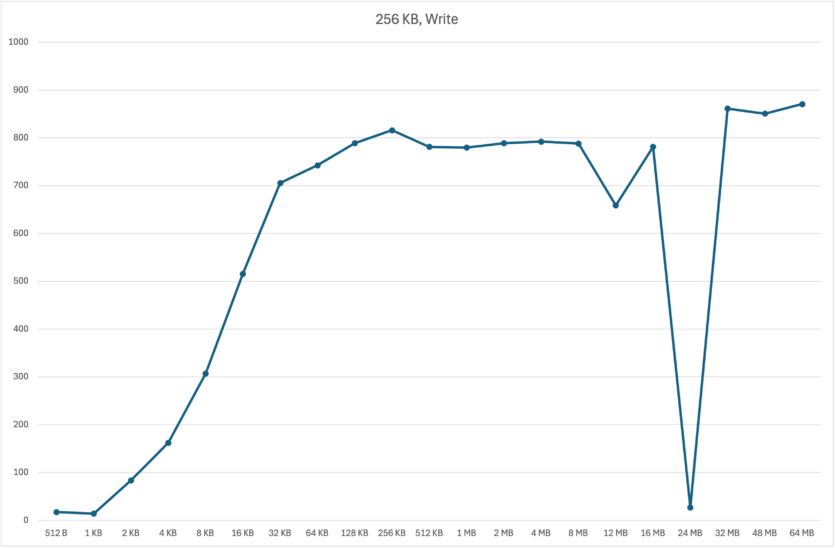

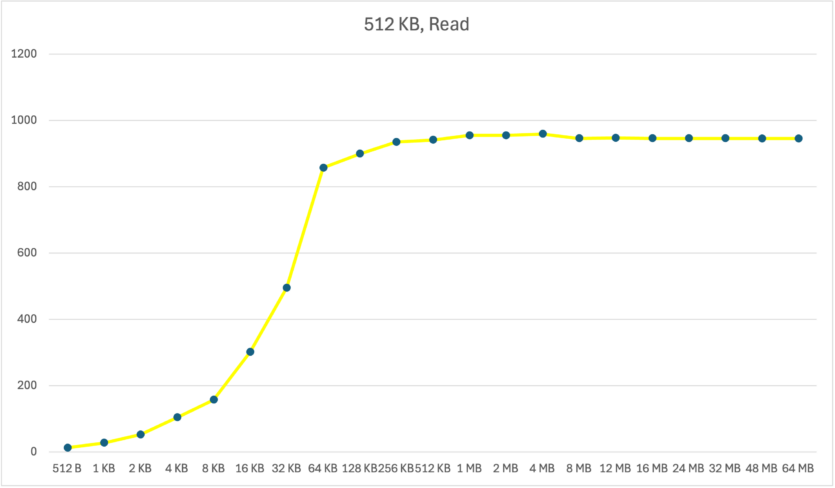

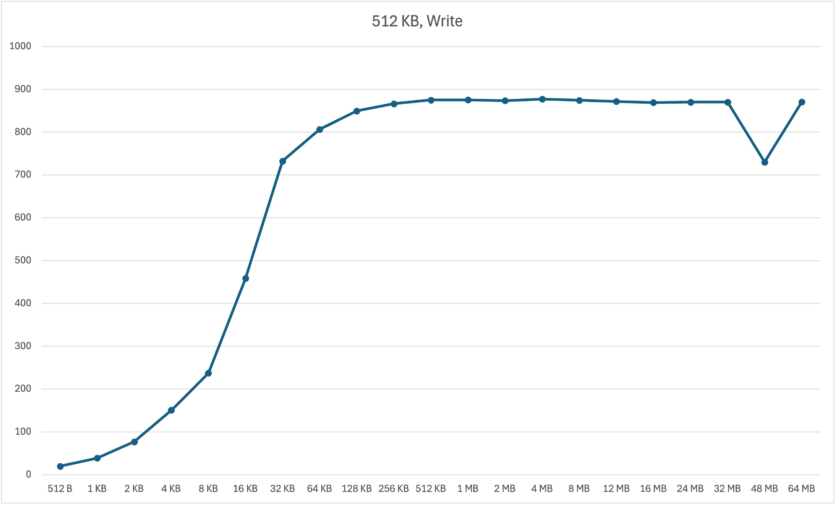

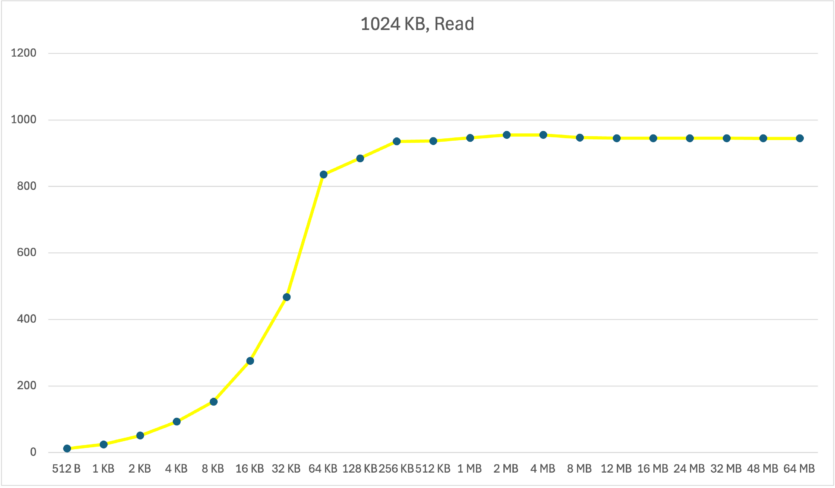

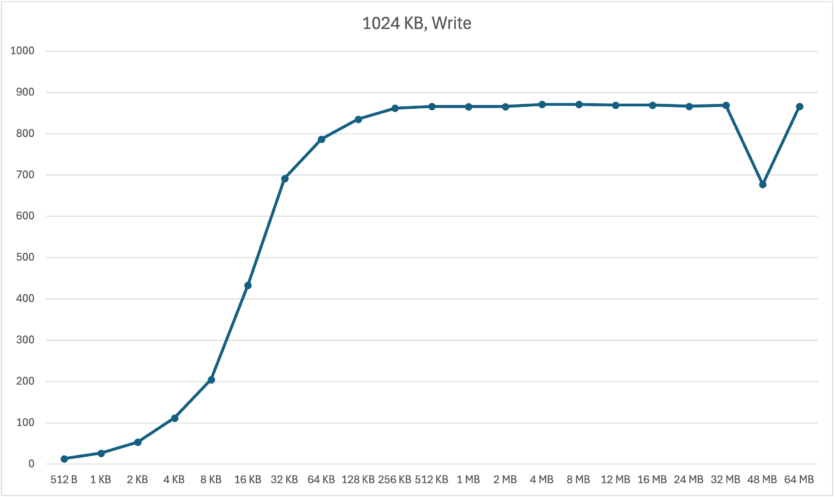

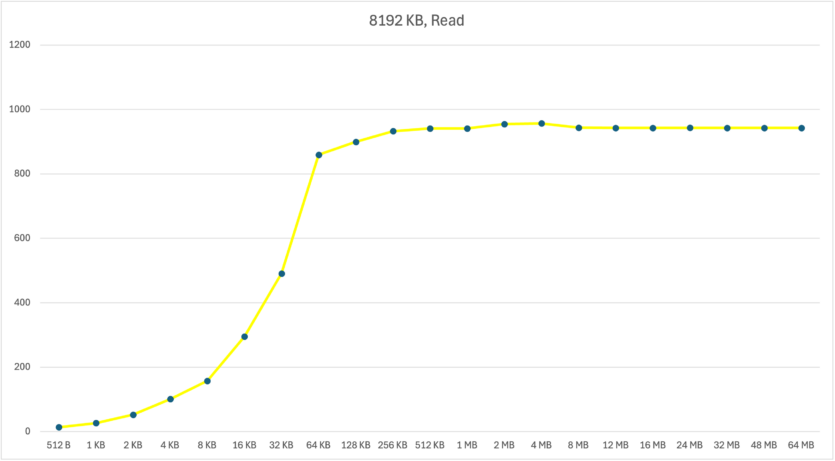

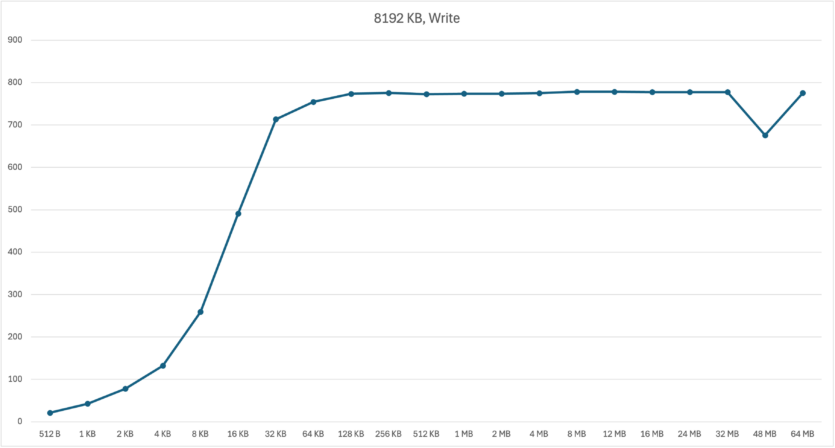

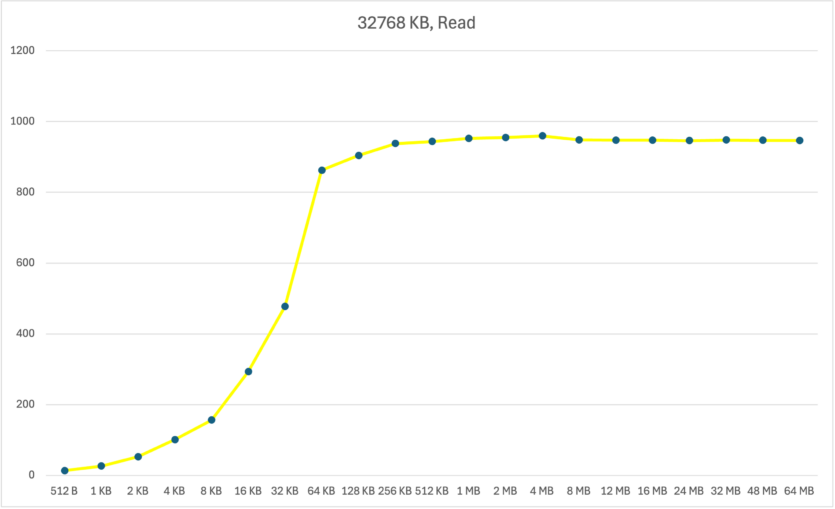

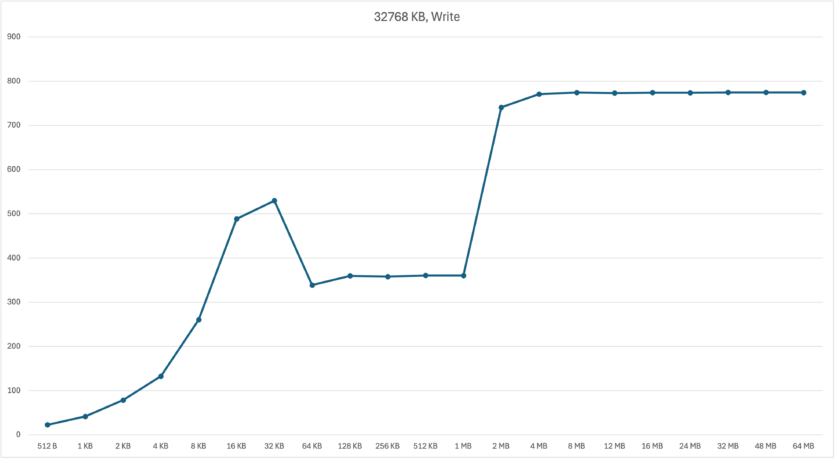

We’ll run the tests in ATTO Disk Benchmark. It measures disk read and write speeds for different I/O buffer sizes. This way, we can see the potential difference in transferring small, medium, and large files. The base file size is set to 16 GB to simulate large game files or high-quality movies and series.
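
To give a rough idea of what such a measurement involves, here is a simplified Python sketch that writes the same amount of data with different buffer sizes and reports the speed. It is not how ATTO Disk Benchmark works internally: the `write_throughput` function, the 8 MB test size, and the chosen buffer sizes are assumptions for illustration, and the numbers depend heavily on the OS cache and the drive.

```python
import os
import tempfile
import time

MB = 1024 * 1024


def write_throughput(total_bytes, buffer_size):
    """Write total_bytes to a temporary file in buffer_size chunks; return MB/s."""
    chunk = b"\0" * buffer_size
    fd, path = tempfile.mkstemp()
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb", buffering=0) as out:
            written = 0
            while written < total_bytes:
                written += out.write(chunk)
            os.fsync(out.fileno())  # ask the OS to flush its cache to the device
        elapsed = time.perf_counter() - start
    finally:
        os.remove(path)
    return written / MB / elapsed


# Same amount of data, three different I/O buffer sizes.
results = {
    size: write_throughput(8 * MB, size)
    for size in (4 * 1024, 64 * 1024, MB)
}
for size, speed in results.items():
    print(f"{size // 1024} KB buffer: {speed:.0f} MB/s")
```

A real benchmark repeats each run several times and uses much larger files, which is why a single odd result should not be over-interpreted.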

Formatting the test SSD takes about one hour for each cluster size.

The test results are presented as graphs. In some cases, abnormal performance drops were recorded. Their exact cause was not established, and after a repeated test round the results could either change or stay the same. For example, writing with a 256 KB cluster shows a sharp drop at the 24 MB buffer. On retesting, the result improved, but a drop appeared at similar buffer sizes for the 128 KB and 32768 KB clusters. Such random drops therefore have to be disregarded.

Let’s look at the two summary charts, starting with reading data. In general, the results for all cluster sizes do not differ much: slightly better in some places, slightly worse in others. A difference of up to 10 MB/s is hard to notice. But it is clear that 64 KB and 128 KB clusters are better suited for reading small files.

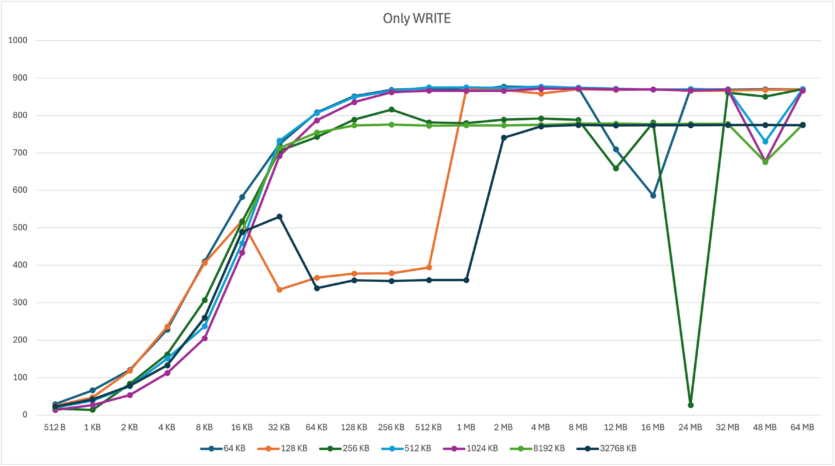

Let’s move on to writing. Here things are a bit more complicated, as some «players in the race» show a performance drop in similar «sections of the track»: with buffers from 64 KB to 1 MB for the 32768 KB cluster, and from 32 KB to 512 KB for the 128 KB and 256 KB clusters. A decrease was also recorded at the 48 MB buffer for the 512, 1024, and 8192 KB clusters. In this case, the test was repeated, and the drop was still there.

After testing, we can say that there is no clear favorite. Reading was not a problem for any of the cluster sizes, but writing was. On the test SSD, the 512, 1024, and 8192 KB clusters showed consistently good results. Let’s take a closer look at them:

- The 8192 KB cluster shows slower speeds (by 100 MB/s) than its «smaller counterparts».

- The 1024 KB cluster shows slower speeds than the 512 KB cluster.

The only problem with these three candidates is the one we mentioned earlier: every small file will take up an entire cluster. The 64 KB and 128 KB clusters, in turn, perform best, especially with small files, but they run into the other problem mentioned above: high fragmentation, which reduces the reliability and service life of the device.

Overall, the winner for most users is the 256 KB cluster. Interestingly, Windows sets this value by default. However, nothing stops you from choosing a different size.

Without file systems, we would not be able to view photos and videos, play games, or communicate on our phones. So one of the main components of the modern digital world deserves our respect.
