With the release of the RTX Blackwell generation, NVIDIA quietly, without much fanfare, showed where graphics are heading next, not only in games but in other areas too. Today we continue the topic started in the previous article and try to understand why we will need neural shaders and the other new technologies we will see in a few years.


The revolution continues

Neural rendering techniques keep improving both GPU performance and image quality. NVIDIA DLSS Super Resolution and Frame Generation have significantly increased frame rates by generating the vast majority of displayed pixels, while keeping image quality on par with classic rendering.
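A quick back-of-the-envelope calculation shows why «the vast majority of pixels» is no exaggeration. The sketch below assumes DLSS Super Resolution in Performance mode (rendering at half the output resolution on each axis, e.g. 1080p upscaled to 4K) combined with frame generation that displays N frames for every traditionally rendered one; the function name is hypothetical, chosen for illustration.

```python
from fractions import Fraction


def rendered_pixel_fraction(axis_scale: Fraction, displayed_per_rendered: int) -> Fraction:
    """Share of displayed pixels that were actually shaded by the game's renderer.

    axis_scale: render resolution / output resolution on each axis
                (assumption: 1/2 for a 1080p -> 4K upscale).
    displayed_per_rendered: frames shown per traditionally rendered frame
                (1 = no frame generation, 2 = one generated frame, 4 = three generated).
    """
    spatial = axis_scale ** 2                       # upscaling: fewer pixels per rendered frame
    temporal = Fraction(1, displayed_per_rendered)  # frame generation: fewer rendered frames
    return spatial * temporal


if __name__ == "__main__":
    for frames in (1, 2, 4):
        rendered = rendered_pixel_fraction(Fraction(1, 2), frames)
        print(f"{frames} displayed per rendered: rendered {rendered}, AI-generated {1 - rendered}")
```

With a multiplier of 4 (the Multi Frame Generation mode introduced with Blackwell), only 1 in 16 displayed pixels comes from classic rendering under these assumptions.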

Similarly, DLSS Ray Reconstruction (RR) uses AI to remove noise and reconstruct missing detail, which significantly reduces the number of rays that must be traced to produce a high-quality ray-traced image. Future AI techniques should keep improving image quality at significantly lower compute and storage costs.
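The basic idea behind denoising can be illustrated without any AI. The sketch below «traces» a flat, evenly lit region with 1 sample per pixel (each ray either reaches the light or not, with probability 0.5), then averages each pixel with its neighbours. The 3x3 box filter is a deliberately crude, classical stand-in for Ray Reconstruction's trained network, and all names are hypothetical.

```python
import math
import random
from typing import List

Image = List[List[float]]


def noisy_render(width: int, height: int, spp: int, true_value: float = 0.5, seed: int = 0) -> Image:
    """Monte Carlo estimate per pixel: fraction of spp rays that 'hit the light'."""
    rng = random.Random(seed)
    return [
        [sum(1 for _ in range(spp) if rng.random() < true_value) / spp for _ in range(width)]
        for _ in range(height)
    ]


def box_denoise(img: Image, radius: int = 1) -> Image:
    """Replace each pixel by the mean of its (2*radius+1)^2 neighbourhood, clipped at borders."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            vals = [
                img[yy][xx]
                for yy in range(max(0, y - radius), min(h, y + radius + 1))
                for xx in range(max(0, x - radius), min(w, x + radius + 1))
            ]
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out


def rmse(img: Image, true_value: float = 0.5) -> float:
    vals = [v for row in img for v in row]
    return math.sqrt(sum((v - true_value) ** 2 for v in vals) / len(vals))
```

On a flat region the filter cuts the error roughly threefold, about what nine times more rays per pixel would achieve. The catch is that a box filter also blurs real edges and fine detail, which is exactly where a learned model that reconstructs detail earns its keep.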

Blackwell’s neural rendering and neural shading technologies will accelerate the adoption of AI in developers’ applications, including real-time rendering and modeling methods based on generative AI.

Generative AI will help game developers dynamically create diverse landscapes, implement more realistic physics simulations, and generate more complex NPC behavior on the fly. Professional 3D modeling applications will be able to generate design options from specified input criteria faster than ever. These and many other scenarios will be supported by Blackwell’s RTX architecture and neural rendering capabilities.

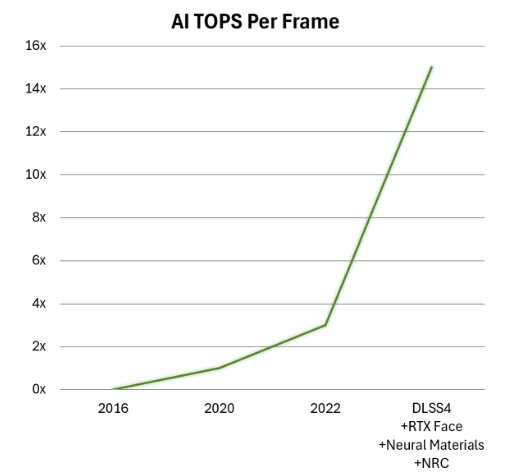

The graph shows that with each new generation of graphics cards, the role of AI in creating frames grows. The Turing architecture laid the foundation for a new era in graphics, combining programmable shading, real-time ray tracing, and AI algorithms to deliver realistic, physically accurate graphics in games and professional applications.

The NVIDIA Ampere architecture updated the SM units, improved the RT and Tensor cores, introduced GDDR6X memory on cards such as the RTX 3090, enhanced DLSS, and delivered huge overall performance gains.

The NVIDIA Ada architecture delivered even higher performance, better power efficiency, and higher visual fidelity for AI-powered ray tracing and neural graphics, adding new DLSS features such as Frame Generation and Ray Reconstruction.

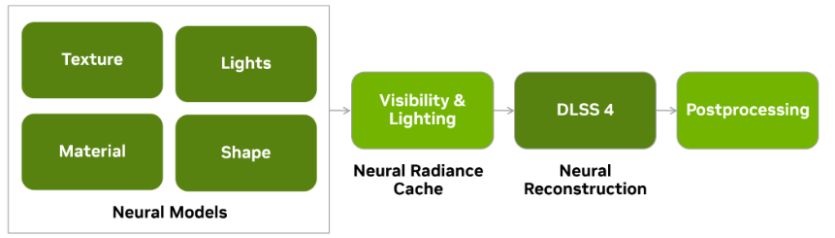

RTX Neural Materials, RTX Neural Faces, RTX Neural Radiance Cache (NRC), and the new DLSS transformer model are more computationally efficient, yet reconstruct images of even better quality. As the graphic above shows, the era of neural rendering has arrived.

Neural shaders (plus a brief history of the shaders that led to them)

Let’s start with the basics. A shader is a program that runs on the GPU and controls how graphics are rendered; its complexity depends on the visual effects and processing required. In their most basic form, shaders compute the lighting, shadowing, and colors used when rendering a scene in a game’s 3D space. This process is called shading.
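To make «computing light and color» concrete, here is a minimal sketch of what a very simple pixel shader calculates: Lambertian diffuse lighting from one directional light plus a small constant ambient term. It is written in Python rather than a real shading language such as HLSL or GLSL, and the function names and the ambient value are illustrative assumptions.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(sum(c * c for c in v))
    return (v[0] / length, v[1] / length, v[2] / length)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def shade_pixel(normal: Vec3, to_light: Vec3, light_color: Vec3,
                albedo: Vec3, ambient: float = 0.05) -> Vec3:
    """Per-pixel Lambert shading: brightness follows the cosine between normal and light direction."""
    n = normalize(normal)
    l = normalize(to_light)
    diffuse = max(dot(n, l), 0.0)  # surfaces facing away from the light get no direct light
    return tuple(
        min(1.0, a * (ambient + diffuse * lc))  # clamp to the displayable range
        for a, lc in zip(albedo, light_color)
    )
```

A surface facing the light head-on gets the full diffuse term; one facing away keeps only the ambient share of its color.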

The evolution of GPU shaders reflects major progress in graphics programming and rendering capabilities. Here is a brief overview of the main stages:

- Fixed-function pipeline (pre-2000s). Graphics were processed by a fixed-function pipeline whose operations could be configured but not programmed, giving only limited control over simple effects such as lighting and texturing.

- Vertex shaders (DirectX 8.0 / OpenGL 1.4, early 2000s). Programmable vertex shaders gave developers access to vertex data, including transformations and lighting calculations, allowing them to create more complex effects.

- Fragment / pixel shaders (DirectX 9.0 / OpenGL 2.0, early 2000s). Let developers write custom code for per-pixel operations, enabling dynamic lighting and texturing and, with Shader Model 2.0, much greater rendering flexibility.

- Unified shader architecture (DirectX 10.0 / OpenGL 3.3, 2006). Geometry, vertex, and fragment shaders run on the same unified cores, which improves resource utilization and efficiency. It introduced Shader Model 4.0, which supported more advanced techniques and optimizations.

- Geometry shaders (DirectX 10 / OpenGL 3.2, 2006). Geometry shaders let developers create and manipulate primitives in the shader pipeline, enabling effects such as dynamic tessellation and particle systems.

- Improved tessellation and compute shaders (DirectX 11, 2009; OpenGL 4.0 for tessellation, OpenGL 4.3 for compute). Tessellation brought higher surface detail and smoother curves to 3D models, and Shader Model 5.0 added more features for real-time rendering techniques. Compute shaders added support for general parallel processing and complex simulations.

- Primitive and mesh shaders (DirectX 12 Ultimate / Vulkan extension, 2018-2020). Expanded the capabilities and performance of the geometry pipeline by combining the functions of the vertex and geometry shaders into a single shader stage. Mesh shaders let the GPU run more complex geometry algorithms, taking more work away from the CPU.

- RTX (NVIDIA Turing architecture / DirectX Raytracing, 2018). Added dedicated real-time ray tracing hardware (RT cores) directly to the GPU’s SM units, enabling realistic lighting, shadows, and reflections.

- Neural shaders (NVIDIA Blackwell architecture, 2025). AI is combined with traditional shaders: neural networks are built into the computations of the traditional rendering pipeline, paving the way for fully neural shading. WE ARE HERE!

As you can see, each new type of shader raised the bar for realism in graphics. The new technologies of each era pushed players to buy a new video card every generation, because graphics looked more and more like «real life». The eyes saw the progress, and the wallet opened.

With the launch of Blackwell, NVIDIA introduced the era of «neural shaders», the next evolutionary step in programmable shading. Instead of writing complex shader code, developers will train AI models to approximate the result that the code would have computed. In the future, all games are expected to use AI for rendering.
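The idea of «training a model instead of writing the code» can be shown on a toy scale. The sketch below treats a hand-written specular term, cos^8 of an angle, as the «shader code» and fits a tiny neural network (one input, 8 tanh hidden units, one output) to it with plain stochastic gradient descent. Real neural shaders use richer inputs, run on Tensor Cores, and are trained with proper frameworks; the network size, learning rate, and function names here are assumptions for illustration only.

```python
import math
import random
from typing import List, Tuple


def target_shader(cos_theta: float) -> float:
    """The hand-written 'shader code' we want to approximate: a cos^8 specular lobe."""
    return max(cos_theta, 0.0) ** 8


class TinyMLP:
    """1 input -> `hidden` tanh units -> 1 linear output."""

    def __init__(self, hidden: int = 8, seed: int = 0):
        rng = random.Random(seed)
        self.w1 = [rng.uniform(-1, 1) for _ in range(hidden)]
        self.b1 = [rng.uniform(-1, 1) for _ in range(hidden)]
        self.w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
        self.b2 = 0.0

    def forward(self, x: float) -> Tuple[float, List[float]]:
        h = [math.tanh(w * x + b) for w, b in zip(self.w1, self.b1)]
        y = sum(w * hj for w, hj in zip(self.w2, h)) + self.b2
        return y, h

    def train_step(self, x: float, t: float, lr: float) -> None:
        """One SGD step on the loss 0.5 * (y - t)^2, with gradients derived by hand."""
        y, h = self.forward(x)
        err = y - t
        for j in range(len(h)):
            grad_pre = err * self.w2[j] * (1.0 - h[j] ** 2)  # backprop through tanh, using old w2
            self.w2[j] -= lr * err * h[j]
            self.w1[j] -= lr * grad_pre * x
            self.b1[j] -= lr * grad_pre
        self.b2 -= lr * err


def mse(model: TinyMLP, xs: List[float]) -> float:
    return sum((model.forward(x)[0] - target_shader(x)) ** 2 for x in xs) / len(xs)


def fit(epochs: int = 300, lr: float = 0.05, seed: int = 0) -> Tuple[TinyMLP, float, float]:
    model = TinyMLP(seed=seed)
    xs = [i / 63 for i in range(64)]  # cos_theta samples in [0, 1]
    before = mse(model, xs)
    rng = random.Random(seed + 1)
    for _ in range(epochs):
        rng.shuffle(xs)
        for x in xs:
            model.train_step(x, target_shader(x), lr)
    return model, before, mse(model, xs)
```

Once trained, the «shader» is just a handful of multiply-adds and activation calls per pixel. A real neural material applies the same basic recipe to a far more complex function.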

Until now, NVIDIA has used neural networks for DLSS, accessing the Tensor Cores through CUDA. NVIDIA has worked with Microsoft on a new Cooperative Vectors API, which gives any type of shader in a graphics application access to the Tensor Cores, opening the door to many neural techniques.
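Why do Tensor Cores matter here? A shader invocation that evaluates a small network performs a chain of matrix-vector products; when many pixels run the same network, those products line up into one matrix-matrix product, the operation Tensor Cores are built to accelerate. The Python sketch below only demonstrates that equivalence. It does not use the real Cooperative Vectors API, and every name in it is hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]
Layer = Tuple[Matrix, Vector]  # (weights W[out][in], bias b[out])


def matvec(W: Matrix, x: Vector, b: Vector) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def mlp_per_pixel(layers: List[Layer], features: Vector) -> Vector:
    """What one shader invocation does: small matrix-vector products with ReLU in between."""
    h = features
    for i, (W, b) in enumerate(layers):
        h = matvec(W, h, b)
        if i < len(layers) - 1:  # no activation after the output layer
            h = [max(0.0, v) for v in h]
    return h


def mlp_batched(layers: List[Layer], pixels: List[Vector]) -> List[Vector]:
    """Same network for all pixels at once: each layer becomes one matrix-matrix product."""
    H = [list(col) for col in zip(*pixels)]  # features x pixels, one column per pixel
    n_pixels = len(pixels)
    for i, (W, b) in enumerate(layers):
        H = [
            [sum(W[r][k] * H[k][p] for k in range(len(H))) + b[r] for p in range(n_pixels)]
            for r in range(len(W))
        ]
        if i < len(layers) - 1:
            H = [[max(0.0, v) for v in row] for row in H]
    return [list(col) for col in zip(*H)]  # back to pixels x outputs
```

Both functions return the same values; the batched form is simply the shape of work that matrix hardware executes efficiently.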

Most likely, Microsoft was not the only partner involved. I’m hinting at AMD, which doesn’t seem to have taken the hint.

You can’t do it without DLSS (or its analogues).


New technologies

If you don’t feel like reading, NVIDIA has published two videos: the first shows the beautifully rendered Zorah 3D demo, and the second explains the technologies covered below.

What aspect ratio is that? 21:9?

RTX Neural Materials

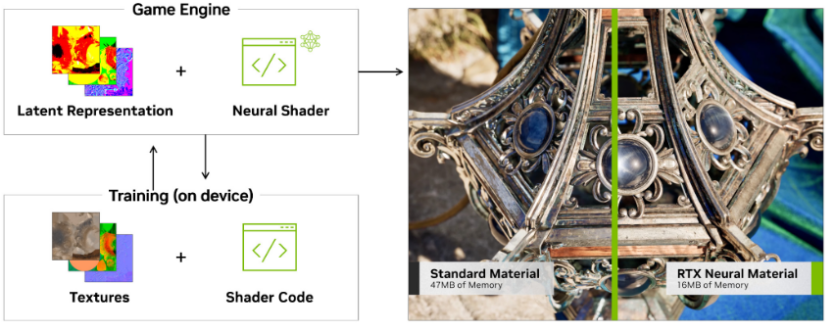

In big-budget CGI films, materials can be very complex and consist of multiple optical layers. Ray tracing such materials is demanding even offline, and doing it in real time is far harder. RTX Neural Materials replaces the original mathematical model of the material with a neural approximation, which represents materials more faithfully while making film-quality rendering possible at high frame rates.
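To see where the cost of layered materials comes from, consider the simplest possible case: a clear coat over a diffuse base. The sketch below uses Schlick’s Fresnel approximation to decide how much light the coat reflects and passes the rest to the base. It is a toy energy split, not a full BRDF (it ignores lobe shapes and the light bouncing between layers that makes real film materials costly), and the function names and the coat value of 0.04 (typical of dielectrics such as lacquer) are assumptions.

```python
def schlick_fresnel(cos_theta: float, f0: float) -> float:
    """Schlick's approximation of Fresnel reflectance for a given view angle."""
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5


def coated_reflectance(cos_theta: float, base_albedo: float, coat_f0: float = 0.04) -> float:
    """Fraction of light reflected by a clear coat over a diffuse base (toy two-layer model)."""
    f = schlick_fresnel(cos_theta, coat_f0)  # reflected at the coat surface
    transmitted = 1.0 - f                    # enters the coat and reaches the base
    return f + transmitted * base_albedo     # base reflection; inter-layer bounces ignored
```

Every extra layer (dust, lacquer, fibers) adds terms like these, and a real renderer also has to handle the interactions between them. A neural material learns the combined result directly, so its evaluation cost depends on the network size rather than on the number of layers.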

RTX Neural Texture Compression (NTC)

More details are available in a dedicated research paper.

As photorealistic rendering advances, the amount of texture data grows with it, placing extra demands on storage and memory and hurting performance through bandwidth limits. RTX Neural Texture Compression uses neural networks to compress and decompress textures more efficiently. In the Zorah demo, the Neural Materials example uses 1110 MB of memory for the standard materials on the lantern and cloth, but only 333 MB for the neural materials: a saving of more than 3x with much better visual quality.
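Some quick arithmetic puts these numbers in context. The helper below computes the size of an uncompressed texture with its full mip chain, showing how fast a single high-resolution map adds up, and then checks the ratio quoted for the Zorah materials. The 4096x4096 RGBA8 texture is an illustrative assumption, not a figure from the demo.

```python
def mip_chain_bytes(width: int, height: int, bytes_per_texel: int) -> int:
    """Size of an uncompressed texture including every mip level down to 1x1."""
    total = 0
    w, h = width, height
    while True:
        total += w * h * bytes_per_texel
        if w == 1 and h == 1:
            break
        w, h = max(1, w // 2), max(1, h // 2)  # each level halves both dimensions
    return total


def savings_ratio(standard_mb: float, neural_mb: float) -> float:
    return standard_mb / neural_mb


if __name__ == "__main__":
    size = mip_chain_bytes(4096, 4096, 4)      # one 4K RGBA8 map
    print(f"{size / 2**20:.1f} MiB")           # 85.3 MiB
    print(f"{savings_ratio(1110, 333):.2f}x")  # 3.33x
```

A material usually stacks several such maps (color, normals, roughness, and so on), which is why block compression is standard today and why a better compression ratio translates directly into freed VRAM.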

Neural Radiance Cache (NRC)

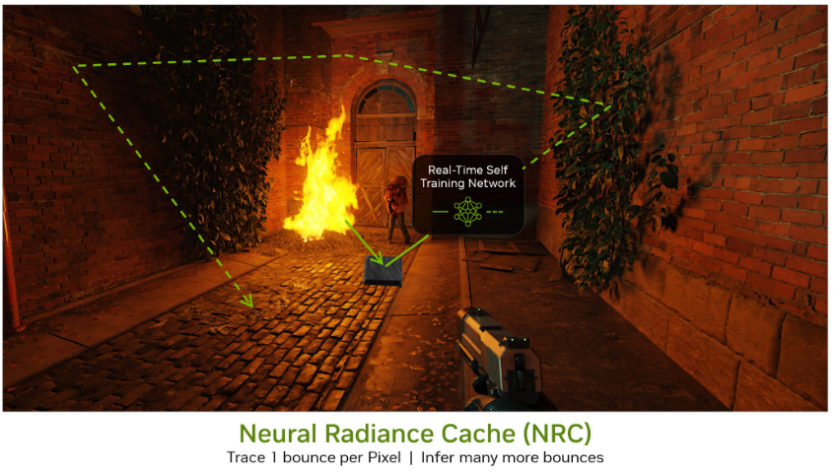

NRC uses a neural shader to cache approximate radiance information. Complex lighting can thus be stored and reused to produce high-quality global illumination and dynamic lighting effects in real time. The technology reduces the computational load on the GPU while improving image quality and scalability.

NRC takes the result of a ray after its first bounce and infers the final lighting contribution of all subsequent bounces. In other words, the initial rays are launched but not traced to completion: after one bounce, the path is handed to the cache, which supplies the result as if the ray had been traced at full length through many reflections. And because the neural network is trained during gameplay, NRC adapts to a wide variety of scenes and can provide accurate indirect lighting for each one.
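The «trace one bounce, then ask the cache» idea can be sketched on a deliberately tiny toy scene in which every surface bounces light to exactly one other surface. A per-surface table stands in for NRC’s neural network (a swapped-in simplification: the real cache is a small network queried with surface attributes such as position and direction), and it is trained online from its own one-bounce-extended predictions. All names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Surface:
    emission: float  # light emitted by the surface
    albedo: float    # fraction of incoming light it reflects (0..1)
    next_hit: int    # toy scene: the single bounce ray from here always hits this surface


def reference_radiance(scene: List[Surface], start: int, bounces: int = 200) -> float:
    """Ground truth: follow the path through many bounces and add up the emitted light."""
    total, throughput, i = 0.0, 1.0, start
    for _ in range(bounces):
        total += throughput * scene[i].emission
        throughput *= scene[i].albedo
        i = scene[i].next_hit
    return total


def train_cache(scene: List[Surface], iterations: int = 500, lr: float = 0.5) -> List[float]:
    """Online self-training: each target is one real bounce plus the cache's own estimate."""
    cache = [0.0] * len(scene)
    for _ in range(iterations):
        for i, s in enumerate(scene):
            target = s.emission + s.albedo * cache[s.next_hit]
            cache[i] += lr * (target - cache[i])
    return cache


def render_with_cache(scene: List[Surface], cache: List[float], start: int) -> float:
    """Trace the primary hit and one bounce, then let the cache stand in for the rest of the path."""
    s = scene[start]
    return s.emission + s.albedo * cache[s.next_hit]
```

After training, a render that traces only one bounce matches the many-bounce reference for every surface. NRC makes the same trade at a much larger scale, with noisy samples and scenes that keep changing.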

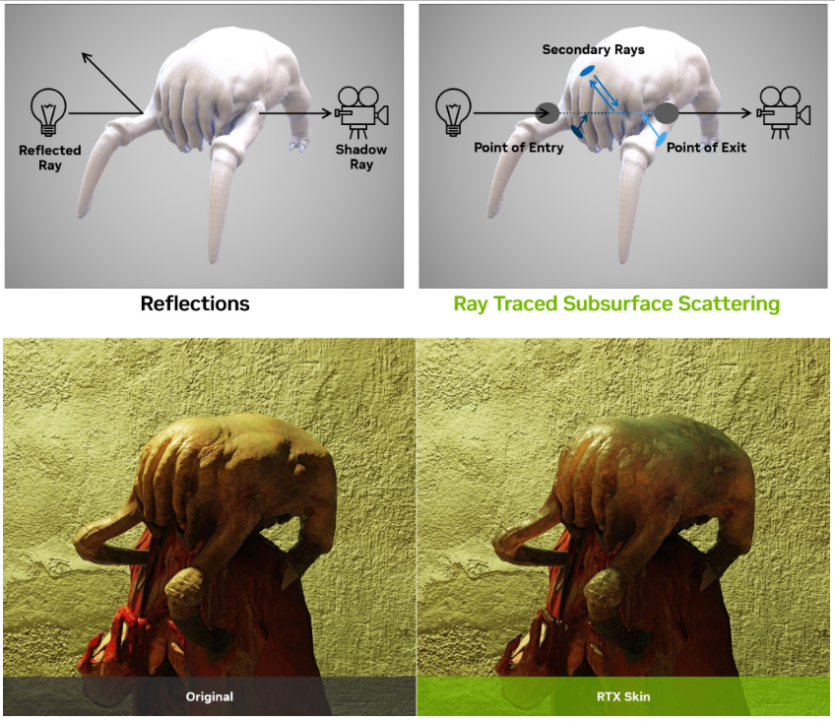

RTX Skin

Skin is one of the hard cases for rendering, because in games it comes in many different types. In rendering terms, skin is the surface mesh that forms the outer layer of an object. This works well for opaque materials such as wood or metal, where rays only need to account for the light arriving at the surface from the scene. Translucent materials behave differently: light enters the material, travels and scatters inside the object, and then exits at other points on its surface. RTX Skin is the first implementation of ray-traced subsurface scattering in games, and artists can use it however they wish.
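Subsurface scattering itself is easy to illustrate with a Monte Carlo random walk. The sketch below fires photons straight into a flat slab of translucent material; inside, each photon flies an exponentially distributed distance, then is either absorbed or scattered in a random (isotropic) direction, until it leaves through the top (seen as reflected light) or the bottom. This is a textbook random-walk model, not NVIDIA’s RTX Skin implementation, and the parameter values are assumptions.

```python
import math
import random
from typing import Tuple


def slab_random_walk(albedo: float, sigma_t: float, thickness: float,
                     n_photons: int = 10000, seed: int = 0) -> Tuple[float, float, float]:
    """Return the (reflected, transmitted, absorbed) fractions for a plane-parallel slab.

    z = 0 is the lit top surface and z grows with depth; mu is the cosine of the
    direction relative to the depth axis (mu = 1 means heading straight in).
    """
    rng = random.Random(seed)
    reflected = transmitted = absorbed = 0
    for _ in range(n_photons):
        z, mu = 0.0, 1.0  # normal incidence at the top surface
        while True:
            d = -math.log(1.0 - rng.random()) / sigma_t  # free flight (Beer-Lambert)
            z += mu * d
            if z < 0.0:
                reflected += 1  # exited back through the lit surface
                break
            if z > thickness:
                transmitted += 1  # exited through the far side
                break
            if rng.random() >= albedo:
                absorbed += 1
                break
            mu = 2.0 * rng.random() - 1.0  # isotropic scattering: cosine uniform in [-1, 1]
    return reflected / n_photons, transmitted / n_photons, absorbed / n_photons
```

Raising the albedo lets far more light wander back out of the surface after scattering inside it; in a full 3D version the exit points also spread away from the entry point, which produces the soft look of skin, wax, or marble.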

RTX Neural Faces

Another long-standing challenge for real-time rendering is realistic faces. RTX Neural Faces offers an innovative new approach to improving face quality using generative AI.

Neural Faces takes a simple rasterized face and 3D pose data as input and uses a real-time generative AI model to create a more natural-looking face. The model is trained on thousands of offline-generated images of that face from every angle, under different lighting conditions, expressions, and occlusions.

The training pipeline can use real photos or AI-generated images, with variants created by a diffusion model. The trained model is then optimized with TensorRT to run in real time. RTX Neural Faces is a first step toward real-time graphics rendered with generative AI.

See the video at 2:20. So far, it doesn’t look good: the face stands out too much from the rest of the image.

It is still a long way from the quality of animation captured from live actors or of MetaHuman.

As we have seen, these technologies, and the ones coming with future generations of graphics cards, will give neural rendering a major performance boost, and it will become NVIDIA’s main «trump card» against its main competitor, AMD.

What about AMD?

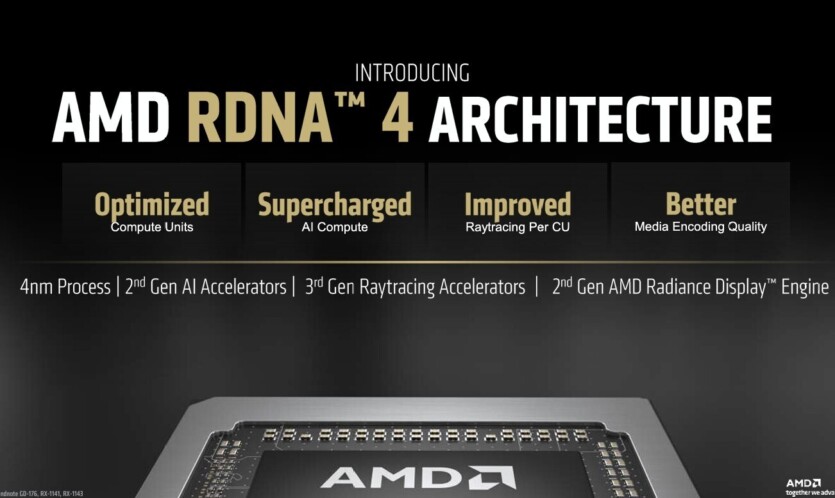

At CES 2025, AMD announced video cards based on the new RDNA 4 architecture: the RX 9070 and RX 9070 XT. One of the features of the new generation is hardware for AI computing. This is genuinely good news: more software developers may start optimizing their applications for AMD graphics cards. But this is not certain.

What is certain is that RDNA 4 brings improved RT support plus a new upscaler and frame generator, under the name AMD FidelityFX Super Resolution 4, or simply FSR 4. Of course, the online community was up in arms, and it’s clear why: FSR 4 will not support older graphics cards. So what exactly counts as an «old graphics card»?

Let’s start with the simple case. NVIDIA has been producing graphics cards with hardware RT support since 2018; seven years later, the fourth generation of RT cores has already arrived. There is a good chance that FSR 4 will support NVIDIA graphics cards.

But with AMD’s own GPUs and APUs, the situation is less clear-cut. For example, the Z1/Z1 Extreme are specially cut-down versions of laptop processors without the AI engine. The Z2 generation (three processors built on different generations of technology) is also a big question mark. Still, I hope they will get FSR 4.

Let’s move on to video cards. The RX 6000 series already had the first RT hardware. It was not called RT cores, but equivalents were already in place (Ray Accelerators, later joined by AI Accelerators in the RX 7000 series). And that was back in 2020, so AMD started laying the groundwork for hardware acceleration years ago. If FSR 4 is made exclusive to the RX 9000 series, it will not be entirely clear why.

It is not yet clear when the PS6 and the next Xbox will arrive. If it is two years from now, a new generation of AMD graphics cards will be out by then, and we will get FSR 5 or a technology under a new name.

Overall, although RDNA 4 was announced at CES 2025, there is still little information about it. We are looking forward to a full presentation, not just 4-5 slides.
