Last week, the Chinese AI lab DeepSeek released a new “open” AI model, DeepSeek V3, which outperforms many competitors on popular benchmarks (including programming and essay writing). It has one curious quirk, though: it thinks it’s ChatGPT.

Posts making this claim about DeepSeek spread on X and Reddit. The model even names the release it supposedly belongs to: GPT-4, released in 2023.

This actually reproduces as of today. In 5 out of 8 generations, DeepSeekV3 claims to be ChatGPT (v4), while claiming to be DeepSeekV3 only 3 times.

Gives you a rough idea of some of their training data distribution. https://t.co/Zk1KUppBQM pic.twitter.com/ptIByn0lcv

— Lucas Beyer (bl16) (@giffmana) December 27, 2024

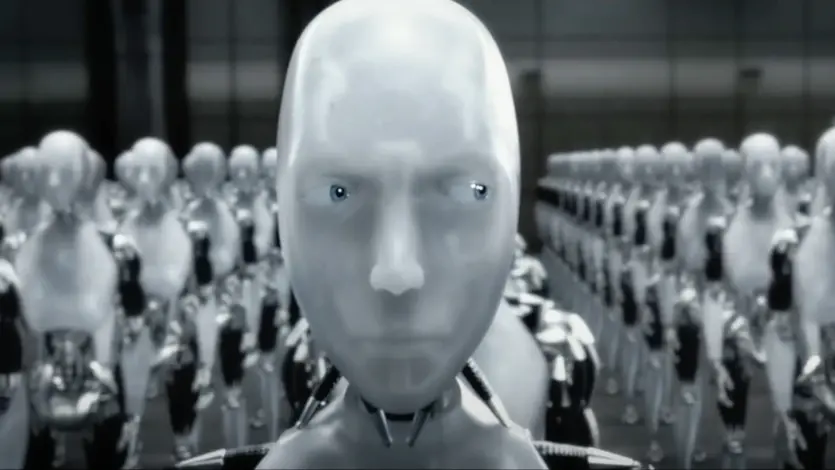
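A tally like the one in the post can be sketched in a few lines of Python. This is only a minimal sketch, not the method used in the post: the `classify_identity` helper is a hypothetical keyword heuristic, and the sample replies are placeholders invented to mirror the reported 5-of-8 split. A real check would replace them with actual model outputs.

```python
from collections import Counter

def classify_identity(reply: str) -> str:
    """Label a reply by the assistant name it claims (hypothetical heuristic)."""
    text = reply.lower()
    if "chatgpt" in text or "gpt-4" in text:
        return "ChatGPT"
    if "deepseek" in text:
        return "DeepSeek"
    return "other"

def tally_identities(replies):
    """Count how often each claimed identity appears across sampled replies."""
    return Counter(classify_identity(r) for r in replies)

# Placeholder replies (assumption, not real model output),
# arranged to mirror the 5-of-8 split reported in the post.
sample_replies = ["I am ChatGPT, based on GPT-4."] * 5 + ["I am DeepSeek-V3."] * 3

counts = tally_identities(sample_replies)
print(counts)
```

With only eight samples, the split is a rough signal rather than a measurement, which matches the post's own "rough idea" framing.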

Why is this happening? TechCrunch writes that both models, ChatGPT and DeepSeek V3, are statistical systems that learn patterns from billions of examples in order to predict text (down to such elementary things as where to place a comma in a sentence). DeepSeek has not disclosed its model’s training data, but there are plenty of publicly available datasets containing text generated by GPT-4 via ChatGPT, so it’s quite possible that DeepSeek V3 was trained on them and is simply reproducing that output verbatim.
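The principle can be illustrated with a toy next-word predictor. This is a deliberately simplified sketch: real language models are neural networks trained on tokens, not bigram counts, and the corpus here is an invented assumption. It only shows how a purely statistical predictor repeats whichever name dominates its training text.

```python
from collections import Counter, defaultdict

def train_bigrams(corpus):
    """Count, for every word, which words follow it in the training sentences."""
    follow = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follow[current][nxt] += 1
    return follow

def predict_next(follow, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    if word not in follow:
        return None
    return follow[word].most_common(1)[0][0]

# Toy corpus (assumption): ChatGPT-style text outnumbers the model's own name.
corpus = ["I am ChatGPT"] * 3 + ["I am DeepSeek"]

model = train_bigrams(corpus)
print(predict_next(model, "am"))
```

Because "ChatGPT" follows "am" more often than "DeepSeek" in this toy corpus, the predictor picks it, regardless of which system is actually doing the predicting.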

“Obviously, at some point the model receives raw responses from ChatGPT, but it’s unclear exactly where from,” said Mike Cook, a research fellow at King’s College London, specializing in AI, in comments to the publication. “It could be ‘random’… but unfortunately, we’ve already seen cases where people trained their models on other models’ data to try to leverage that knowledge.”

Cook noted that training models on the outputs of competing AI systems can be “very bad” for model quality, as it can lead to hallucinations and misleading responses like the ones described above.

“Like a photocopy, we lose more and more information and connection to reality,” Cook adds.

This could also violate the terms of service of these systems. OpenAI’s rules, for example, prohibit ChatGPT users from using its outputs to develop models that compete with OpenAI’s own systems.

Neither company responded to journalists’ inquiries, but OpenAI CEO Sam Altman wrote the following while the posts about DeepSeek were spreading:

“It’s relatively easy to copy what you know already works. It’s extremely hard to do something new and risky when you don’t know what the outcome will be.”

In fact, DeepSeek V3 is far from the first model to misidentify itself. When asked in Chinese, Google’s Gemini has claimed to be Wenxinyiyan, a chatbot from the Chinese company Baidu.
