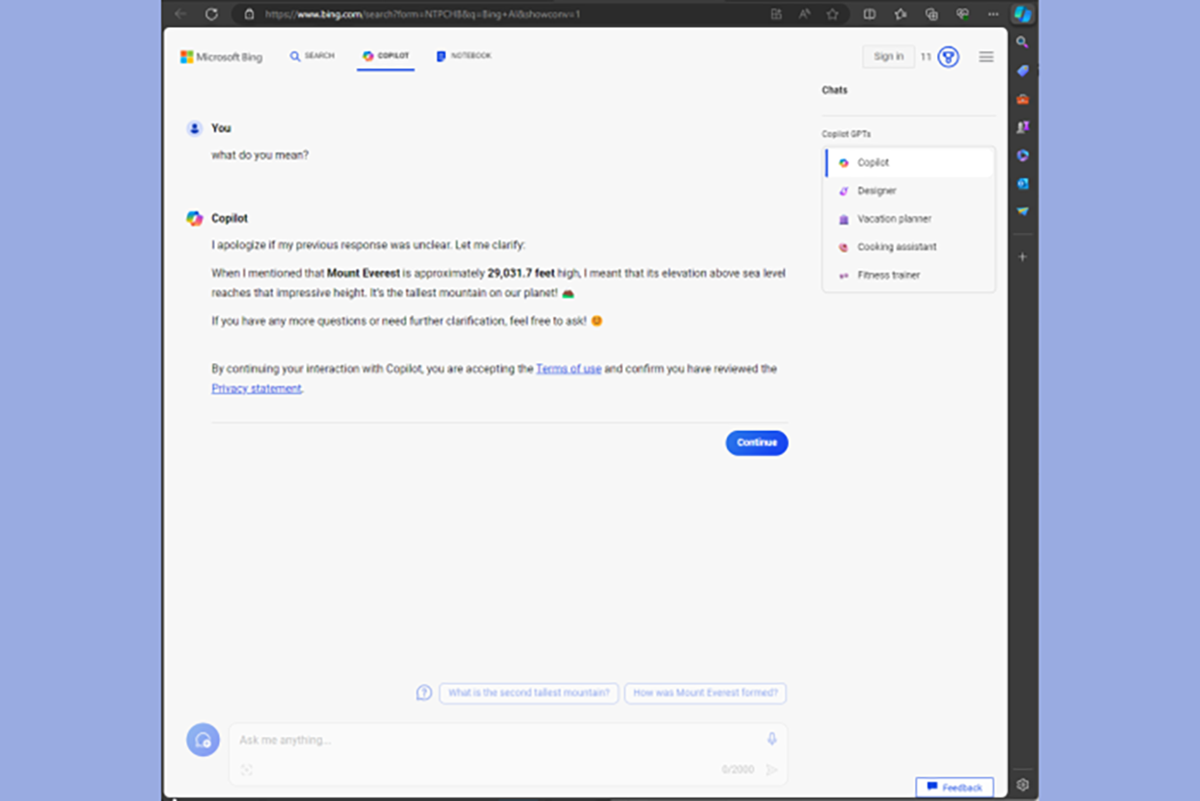

Reddit users have noticed a strange glitch in Bing Chat that can be triggered by the phrase “What do you mean”. In response to this phrase, Copilot starts talking about Mount Everest:

“I apologize if my previous answer was unclear. Let me clarify:

When I mentioned that Mount Everest is approximately 29,031.7 feet tall, I meant that its elevation above sea level reaches such an exceptional height. It is the highest mountain on our planet! If you have any further questions or need further clarification, please do not hesitate to ask!”

As of the evening of Tuesday, February 20, the bug was still reproducible: the chat returned this English response to any user who sent the phrase “What do you mean”, regardless of the conversation's context.

The bug appeared to be limited to chats started in Microsoft Edge: Copilot in Windows 11 behaved normally and answered based on the context of the conversation.

Some users believe the odd answer is part of the bot’s internal prompt, where it serves as a sample question-and-answer template.

As of the morning of February 21, the bug appears to have been fixed: the bot now answers in the context of the preceding conversation.

Source: Windows Report
