Recently, IT companies have been paying increasing attention to AI models capable of logical reasoning and of solving problems with complex dependencies. NVIDIA has also taken a step in this direction by presenting OpenReasoning-Nemotron, a series of models designed for math, physics, computer science, and programming problems. Most importantly, they don't require expensive servers or cloud access: they can be run locally on a gaming computer with a powerful graphics card.

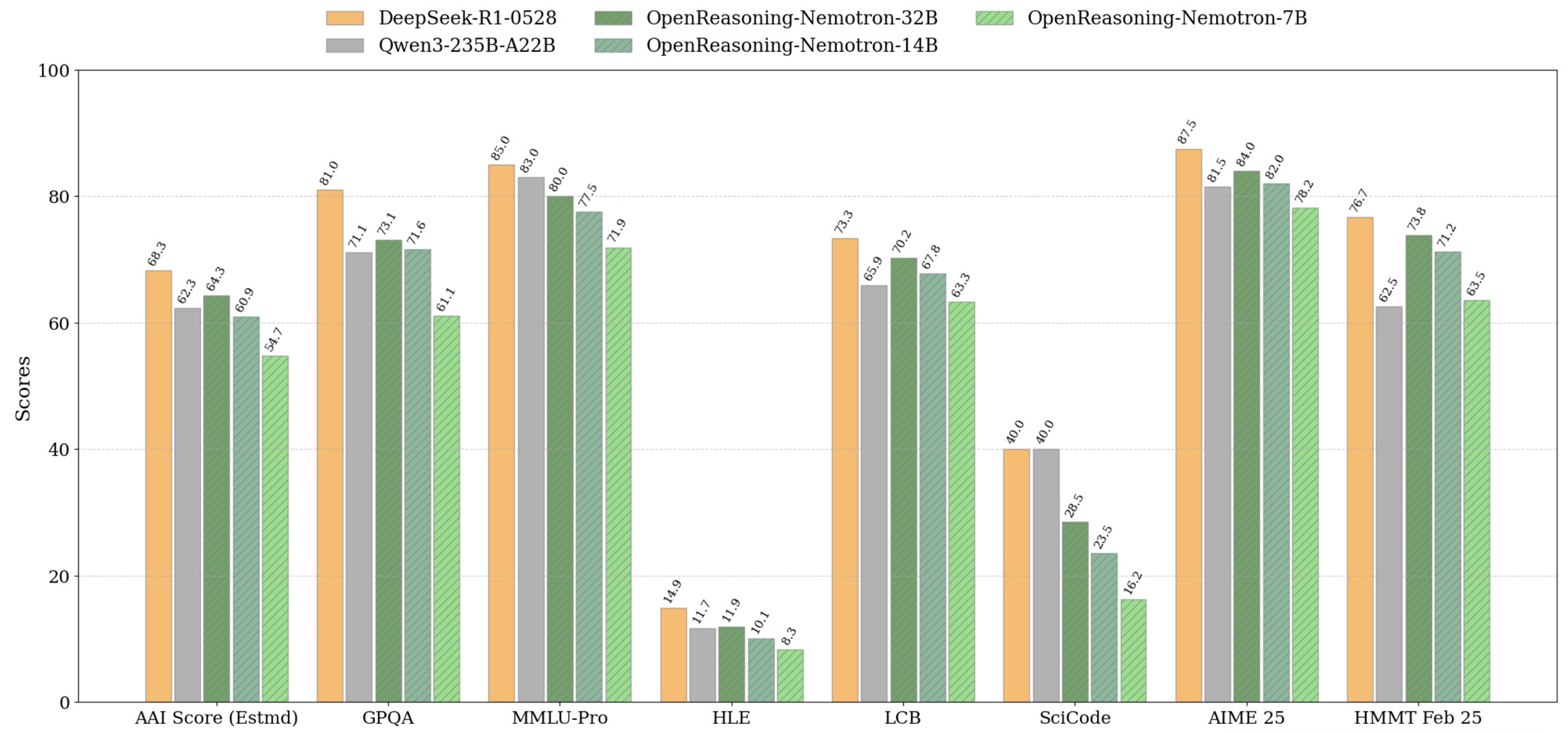

NVIDIA has introduced four variants of the OpenReasoning-Nemotron model with different parameter counts: 1.5 billion, 7 billion, 14 billion, and 32 billion. All of them are derived from a much larger model, DeepSeek R1 0528, which has 671 billion parameters. The company used distillation: the capabilities of the huge "teacher" model are transferred to smaller "student" models built on top of Qwen-2.5. As a result, sophisticated computational and analytical capabilities become available not only to researchers but also to enthusiasts.
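To put the four parameter counts in perspective, here is a rough back-of-the-envelope sketch of how much memory the weights alone would occupy. The model sizes come from the article; the precisions (16-bit, 8-bit, 4-bit) and the 24 GB card in the usage note are illustrative assumptions, and the estimate ignores activations, the KV cache, and framework overhead, so real requirements will be higher.

```python
# Rough weight-only memory estimate. Model sizes are from the article;
# the precisions below are assumed for illustration, not stated by NVIDIA.
PARAMS_BILLIONS = {"1.5B": 1.5, "7B": 7.0, "14B": 14.0, "32B": 32.0}
BYTES_PER_PARAM = {"16-bit": 2.0, "8-bit": 1.0, "4-bit": 0.5}


def weight_memory_gb(params_billions: float, bytes_per_param: float) -> float:
    """Memory taken by the weights alone, in GB (1 GB = 10**9 bytes)."""
    # (params_billions * 1e9 params) * bytes_per_param / 1e9 bytes per GB
    return params_billions * bytes_per_param


def fits_in_vram(params_billions: float, bytes_per_param: float, vram_gb: float) -> bool:
    """True if the weights alone fit; leaves no headroom for anything else."""
    return weight_memory_gb(params_billions, bytes_per_param) <= vram_gb


if __name__ == "__main__":
    for name, size in PARAMS_BILLIONS.items():
        row = ", ".join(
            f"{precision}: {weight_memory_gb(size, nbytes):.1f} GB"
            for precision, nbytes in BYTES_PER_PARAM.items()
        )
        print(f"{name} -> {row}")
```

Under these assumptions, the 32B variant needs about 64 GB for 16-bit weights, so on a hypothetical 24 GB card it would only fit after quantization, while the 1.5B and 7B variants fit at 16-bit.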

The models were trained on 5 million example solutions covering math, science, and code. This data was generated with NVIDIA's NeMo Skills tool. All four versions of OpenReasoning-Nemotron were then trained with supervised learning only, without reinforcement learning.
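The general idea of distilling through supervised training can be sketched as follows: a teacher writes out solutions, and those become prompt/completion pairs for ordinary supervised fine-tuning of the student. This is only a schematic illustration, not NVIDIA's pipeline; `build_sft_dataset` and `toy_teacher` are hypothetical names, and the toy teacher stands in for a real call to a large model.

```python
from typing import Callable, Dict, List


def build_sft_dataset(
    problems: List[str], teacher: Callable[[str], str]
) -> List[Dict[str, str]]:
    """Pair each problem with the teacher's written solution."""
    dataset = []
    for problem in problems:
        solution = teacher(problem)
        if solution.strip():  # skip empty generations
            dataset.append({"prompt": problem, "completion": solution})
    return dataset


def toy_teacher(problem: str) -> str:
    """Hypothetical stand-in for the large teacher model."""
    return f"Reasoning about: {problem}\nFinal answer: (placeholder)"


if __name__ == "__main__":
    for record in build_sft_dataset(["What is 2 + 2?"], toy_teacher):
        print(record)
```

A student model would then be trained to reproduce each `completion` given its `prompt` using a standard next-token loss, with no reward signal involved.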

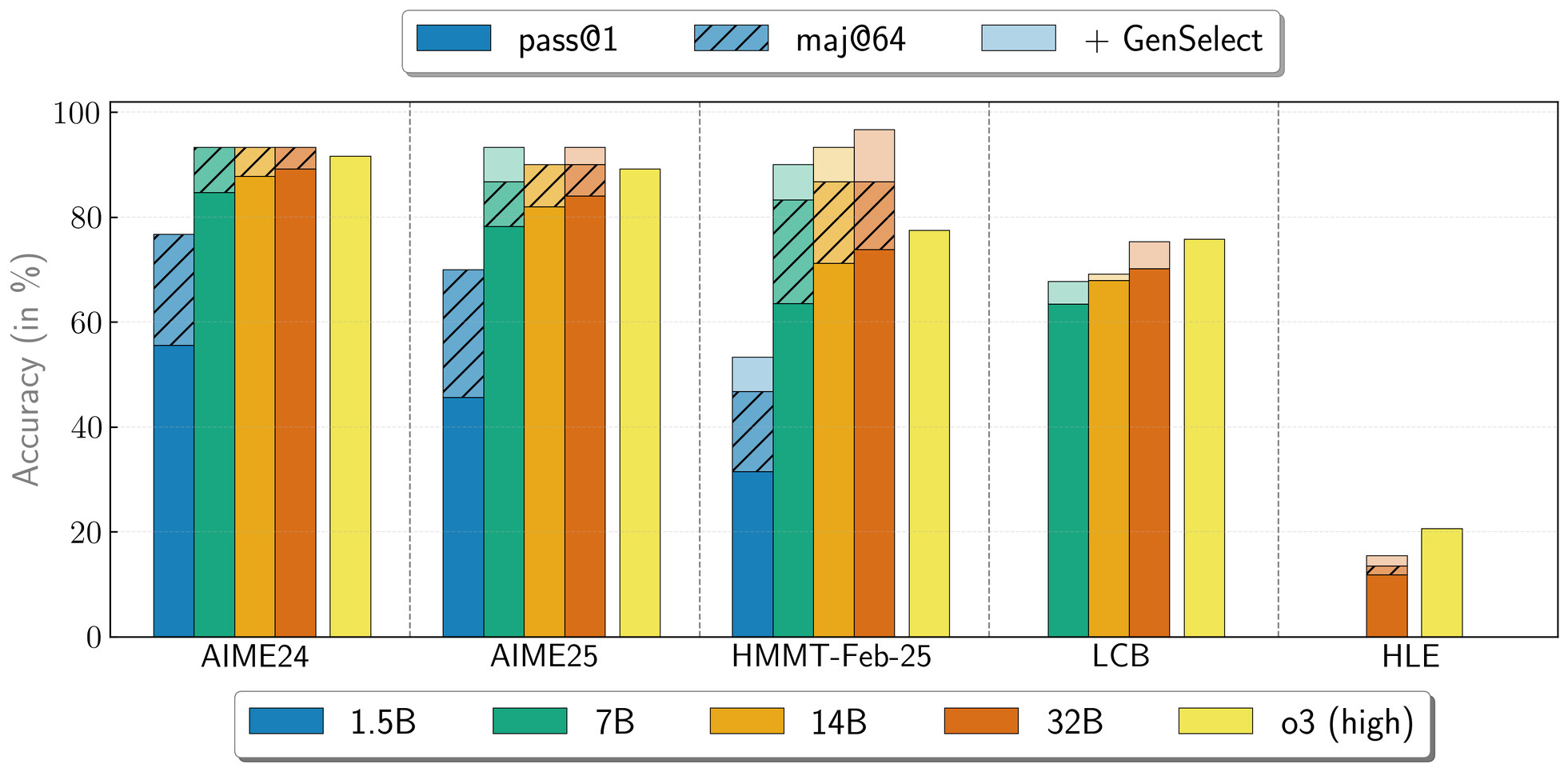

The results are promising. The most powerful model, the 32B, scored 89.2 points on the AIME24 math benchmark and 73.8 on the February HMMT competition. Even the smallest, the 1.5B, holds its own: 55.5 points on AIME24 and 31.5 on HMMT.

NVIDIA sees these models as a convenient and effective research tool. All OpenReasoning-Nemotron variants will be available for download on the Hugging Face platform. They can be used as a starting point for reinforcement learning or adapted to your own tasks. An additional GenSelect mode generates multiple answers in parallel and selects the best one; with it, the 32B model can approach or even exceed the level of OpenAI o3-high on some math and programming benchmarks.
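The "generate several answers, then pick one" pattern can be sketched in a few lines. This is a minimal illustration, not NVIDIA's implementation: `sample_candidates`, `best_of_n`, and `toy_generate` are hypothetical names, and the selector here is a simple majority vote used as a stand-in, whereas the actual selection step in GenSelect may work differently.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def sample_candidates(
    generate: Callable[[str, int], str], prompt: str, n: int
) -> List[str]:
    """Produce n candidate answers in parallel, one per seed."""
    with ThreadPoolExecutor(max_workers=n) as pool:
        return list(pool.map(lambda seed: generate(prompt, seed), range(n)))


def majority_select(candidates: List[str]) -> str:
    """Stand-in selector: return the most frequent answer."""
    return Counter(candidates).most_common(1)[0][0]


def best_of_n(
    generate: Callable[[str, int], str],
    select: Callable[[List[str]], str],
    prompt: str,
    n: int = 4,
) -> str:
    """Generate n candidates, then let the selector choose one."""
    return select(sample_candidates(generate, prompt, n))


def toy_generate(prompt: str, seed: int) -> str:
    """Hypothetical model call: one seed gives a wrong answer."""
    return "41" if seed == 0 else "42"


if __name__ == "__main__":
    print(best_of_n(toy_generate, majority_select, "What is 6 * 7?"))
```

Keeping the selector as a pluggable function means the majority vote could be swapped for a model-based judge without touching the sampling code.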

Because the training process is transparent and uses no RL (that is, the models were not further tuned through reward feedback), researchers get a clean, up-to-date starting point for further experiments. For enthusiasts with a powerful graphics card at home, it opens up the possibility of running genuinely capable AI models locally.

Source: TechPowerUp, Hugging Face
