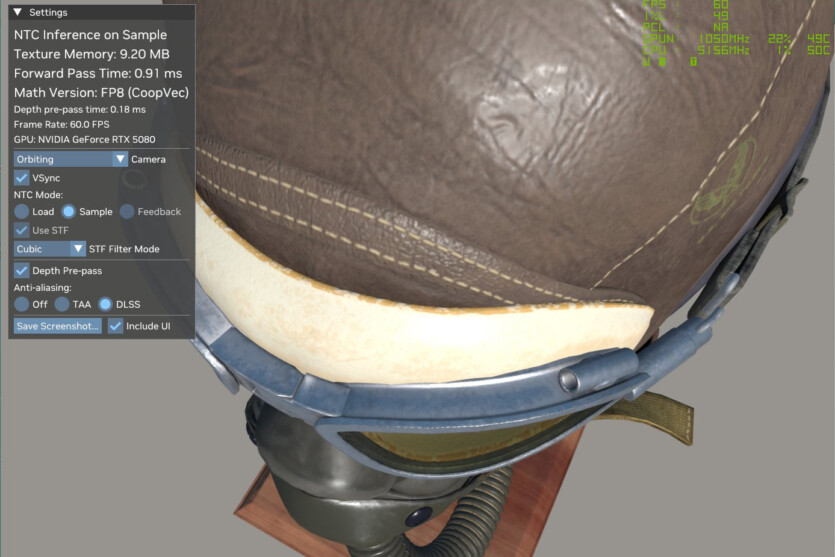

An enthusiast tested NVIDIA’s Neural Texture Compression (NTC) together with Microsoft DirectX Raytracing 1.2, and the result is fantastic.

X user @opinali tested neural texture compression and got impressive results in both performance and VRAM savings (Osvaldo’s original thread has a lot of technical details). In his rendering tests, he saw a significant drop in video memory usage with NTC combined with DirectX Raytracing (DXR) 1.2. With Cooperative Vectors and NTC both enabled in "Default" mode, the texture rendered at 2350 fps, compared to 1030 fps with both disabled: more than twice the frame rate (roughly 2.3 times as fast). Video memory usage also differed significantly.
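
The two frame rates can be compared in more than one way, which is why quoted percentages vary. The small Python sketch below uses only the figures from the test (2350 fps and 1030 fps) and computes the speedup ratio, the time per frame, the reduction in frame time, and the symmetric percentage difference (the absolute gap divided by the mean of the two values). The function names are illustrative, not taken from any NVIDIA tool.

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds spent on one frame at a given frame rate."""
    return 1000.0 / fps


def speedup(fast_fps: float, slow_fps: float) -> float:
    """How many times faster the fast configuration is."""
    return fast_fps / slow_fps


def frame_time_reduction_percent(fast_fps: float, slow_fps: float) -> float:
    """Percentage by which per-frame time shrinks (1 - t_fast / t_slow)."""
    return (1.0 - slow_fps / fast_fps) * 100.0


def symmetric_percent_difference(a: float, b: float) -> float:
    """|a - b| divided by the mean of a and b, as a percentage."""
    return abs(a - b) / ((a + b) / 2.0) * 100.0


ntc_on, ntc_off = 2350.0, 1030.0  # fps with NTC + Cooperative Vectors on / off

print(f"speedup: {speedup(ntc_on, ntc_off):.2f}x")  # speedup: 2.28x
print(f"frame time: {frame_time_ms(ntc_on):.2f} ms vs {frame_time_ms(ntc_off):.2f} ms")  # frame time: 0.43 ms vs 0.97 ms
print(f"frame time reduction: {frame_time_reduction_percent(ntc_on, ntc_off):.1f}%")  # frame time reduction: 56.2%
print(f"symmetric difference: {symmetric_percent_difference(ntc_on, ntc_off):.1f}%")  # symmetric difference: 78.1%
```

In other words, the same pair of numbers reads as "2.3 times faster", "56% less time per frame", or "about 78% apart", depending on the formula.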

First look at NVIDIA’s Neural Texture Compression with DXR 1.2 Cooperative Vector!

First, this needs a preview driver (590.26). I installed that so you don’t have to, and it corrupted the screen; only after a few hard resets did it decide to work.😅🧵 https://t.co/szgX1jVtcY

— Osvaldo Pinali Doederlein (@opinali) July 15, 2025

Neural Texture Compression uses neural networks to compress game textures and decompress them on the GPU, shrinking them with minimal impact on quality. A key feature of Microsoft’s DXR 1.2 update is support for cooperative vectors, which let GPU shader threads work together on small matrix-vector operations, such as evaluating a compact neural network, using the GPU’s matrix-math hardware (Tensor Cores on NVIDIA RTX cards). Running NTC on top of cooperative vectors gives DirectX 12 an efficient compression and decompression path, and that is what reduces video memory usage.
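
To make the idea more concrete, here is a minimal Python sketch of the kind of computation involved: a tiny multilayer perceptron that turns a per-texel latent feature vector into RGBA values, with one matrix-vector product per layer. The layer sizes, activations, weights, and function names are hypothetical toy choices; this is not NVIDIA’s actual NTC network or the DirectX cooperative vector API. On the GPU, the `matvec_bias` step is the part that cooperative vectors would hand to the matrix hardware.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # rows = outputs, columns = inputs
Layer = Tuple[Matrix, Vector]


def matvec_bias(w: Matrix, x: Vector, b: Vector) -> Vector:
    """y = W @ x + b: the small matrix-vector product cooperative vectors accelerate."""
    if len(w) != len(b) or any(len(row) != len(x) for row in w):
        raise ValueError("matrix, input and bias shapes do not match")
    return [sum(wij * xj for wij, xj in zip(row, x)) + bi for row, bi in zip(w, b)]


def relu(v: Vector) -> Vector:
    return [max(0.0, x) for x in v]


def sigmoid(v: Vector) -> Vector:
    return [1.0 / (1.0 + math.exp(-x)) for x in v]


def decode_texel(latent: Vector, layers: List[Layer]) -> Vector:
    """Run the toy decoder: ReLU on hidden layers, sigmoid to squash colors into (0, 1)."""
    h = latent
    for i, (w, b) in enumerate(layers):
        h = matvec_bias(w, h, b)
        if i < len(layers) - 1:
            h = relu(h)
    return sigmoid(h)


# Hypothetical toy network: 4 latent features -> 3 hidden units -> 4 outputs (RGBA).
toy_layers: List[Layer] = [
    ([[0.5, -0.2, 0.1, 0.3],
      [-0.4, 0.6, 0.2, -0.1],
      [0.3, 0.3, -0.5, 0.2]], [0.0, 0.1, -0.1]),
    ([[1.0, -0.5, 0.2],
      [0.3, 0.8, -0.6],
      [-0.2, 0.4, 0.9],
      [0.5, 0.5, 0.5]], [0.0, 0.0, 0.0, 1.0]),
]
latent = [0.8, 0.1, 0.5, 0.3]  # hypothetical latent features stored for one texel

rgba = decode_texel(latent, toy_layers)
print([round(c, 3) for c in rgba])
```

The memory saving in a scheme like this comes from storing the small latent vectors and shared network weights instead of full-resolution texels; the cost is that every sample runs a few matrix-vector products, which is why hardware acceleration for them matters.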

NTC currently works only on NVIDIA graphics cards, since Intel and AMD do not yet offer the corresponding neural rendering tools. NTC mode is available with the latest 590.26 Preview drivers, which also enable NVIDIA Smooth Motion technology on RTX 40 cards. If these technologies are combined and integrated into games, they could deliver a real performance boost. As a reminder, AMD recently introduced a new procedural tree generation technique, which also minimizes memory usage.

Source: Wccftech
