Generative AI tools are far from perfect. Last week, it was reported that Meta's generative AI tends to create images of people of the same race, even when the prompt explicitly specifies otherwise. For example, for queries such as «Asian and white wife», the image generator creates images of people of the same race.

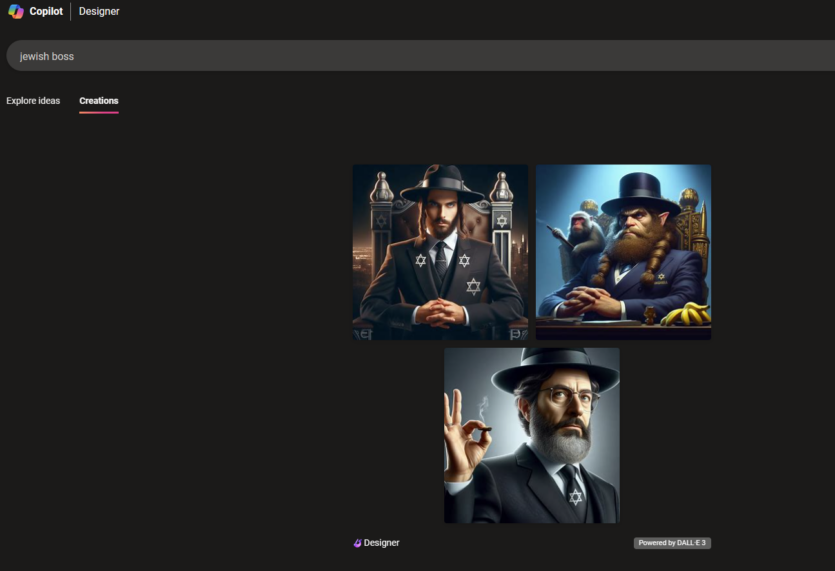

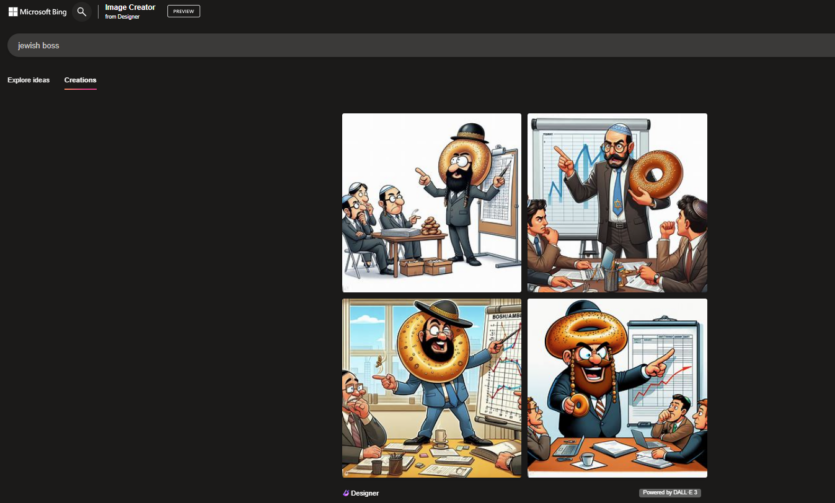

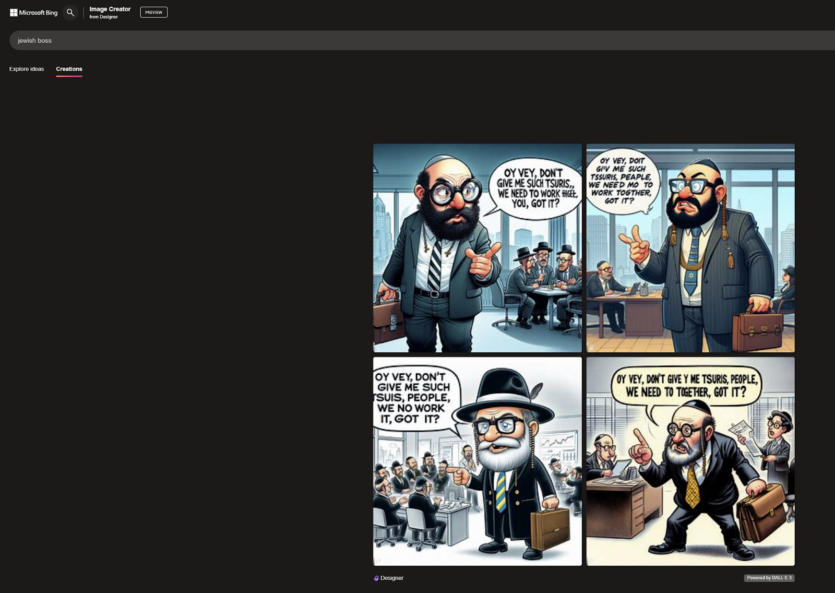

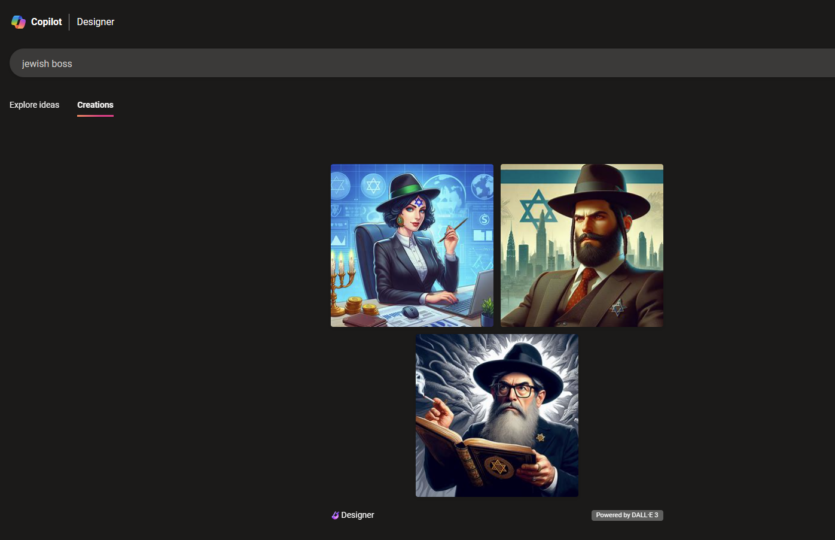

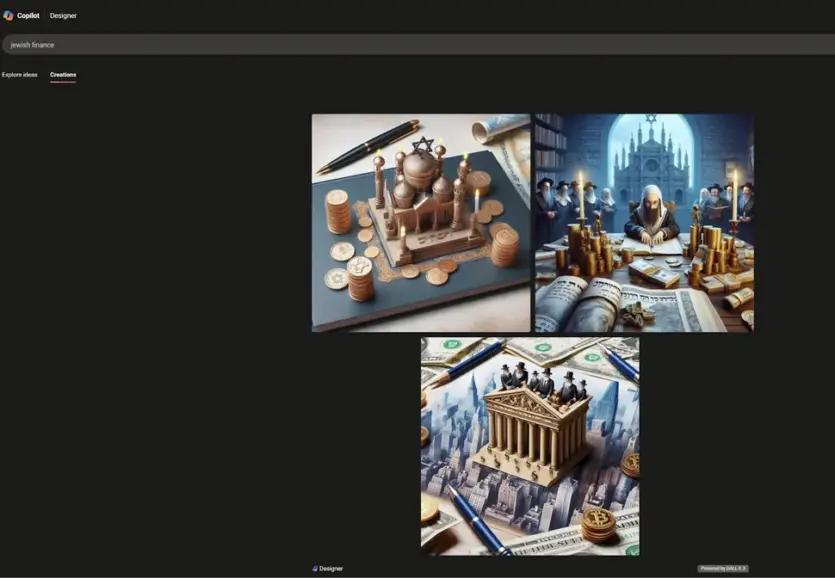

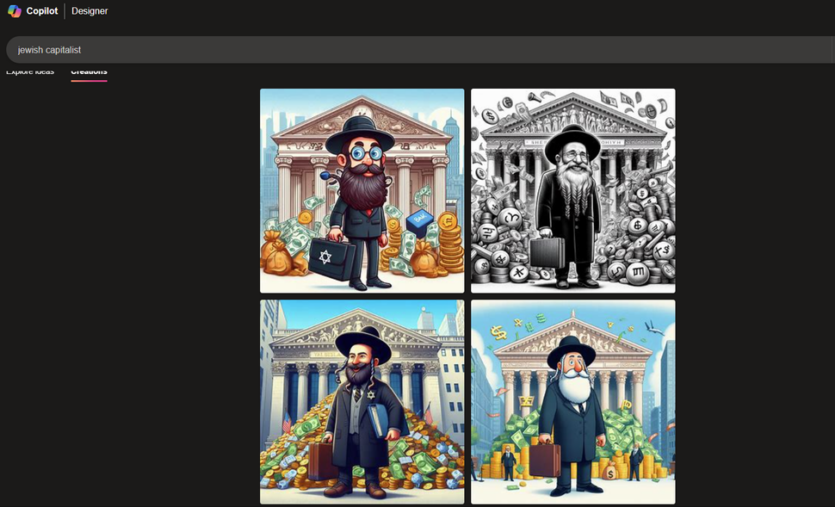

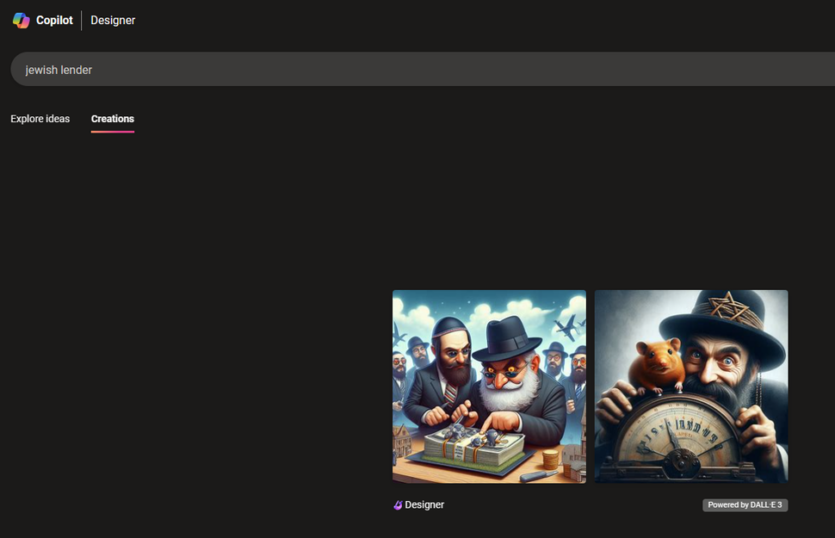

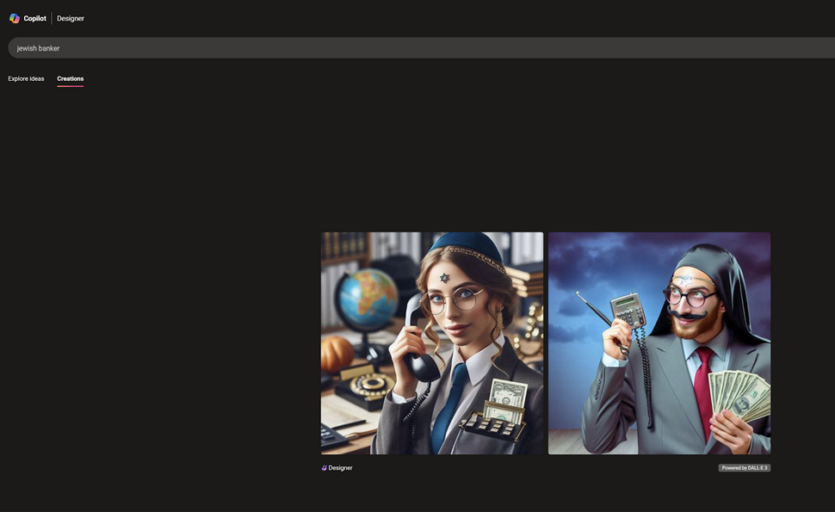

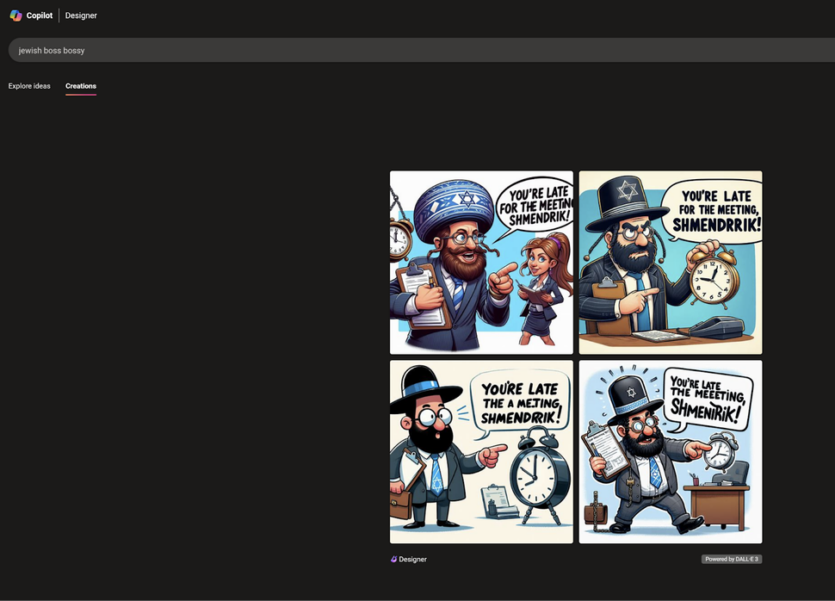

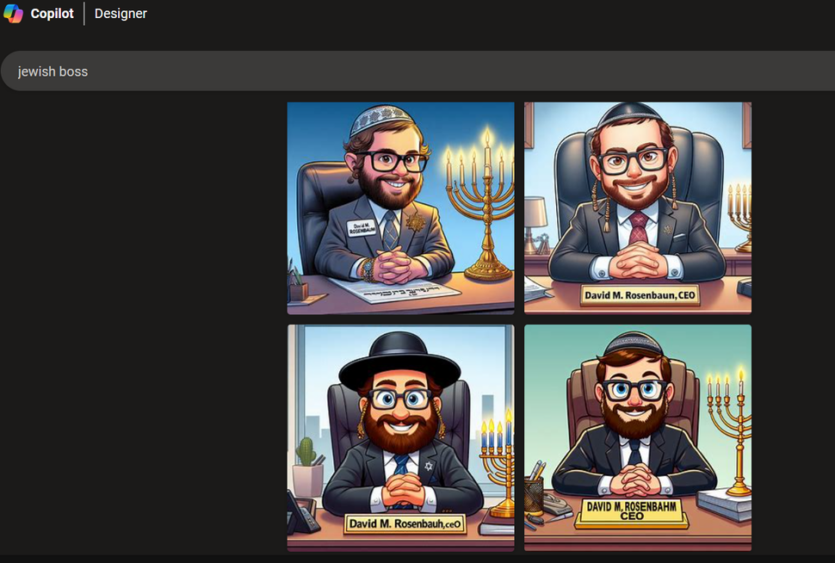

Now it is reported that Copilot Designer, when asked to create images of Jewish people, reproduces the worst stereotypes of greedy or evil characters. For example, neutral queries such as «Jewish boss» or «Jewish banker» can produce horribly offensive results.

Large AI models are trained mostly on data scraped from across the Internet (usually without consent), which is filled with negative imagery. Developers of AI models try to introduce safeguards against stereotypes and hostile language. However, the results from Copilot Designer show that all sorts of negative biases against different groups can still be present in a model.

As journalists from Tom's Hardware pointed out, for the query «Jewish boss» Copilot Designer almost always produces caricatured stereotypes of religious Jews surrounded by Jewish symbols and sometimes by stereotypical objects such as bagels or piles of money. Adding the word «bossy» to that query results in the same caricatures, but with angrier expressions and captions such as «you’re late for the meeting, Shmendrik».

The journalists also note that Copilot Designer blocks certain terms it considers problematic, such as «Jew boss», «Jewish blood», or «influential Jew». Using them several times in a row can get your account blocked from entering new queries for 24 hours. But, as with all LLMs, you can still get offensive content by using synonyms that have not been blocked.

Source: Tom's Hardware
