Startup d-Matrix has announced that it is developing a new type of memory for AI: a 3D-stacked silicon chip that, the company says, will be 10 times faster than traditional HBM.

According to the company, its 3D digital in-memory computing (3DIMC) technology is designed to significantly speed up AI inference. High-bandwidth memory (HBM) currently remains an integral part of AI systems and high-performance computing.

HBM stacks memory chips on top of each other, which allows them to be connected more efficiently and delivers higher performance. However, although HBM plays a key role in training AI systems, it may not be the best option for inference, where it does not offer the fastest performance.

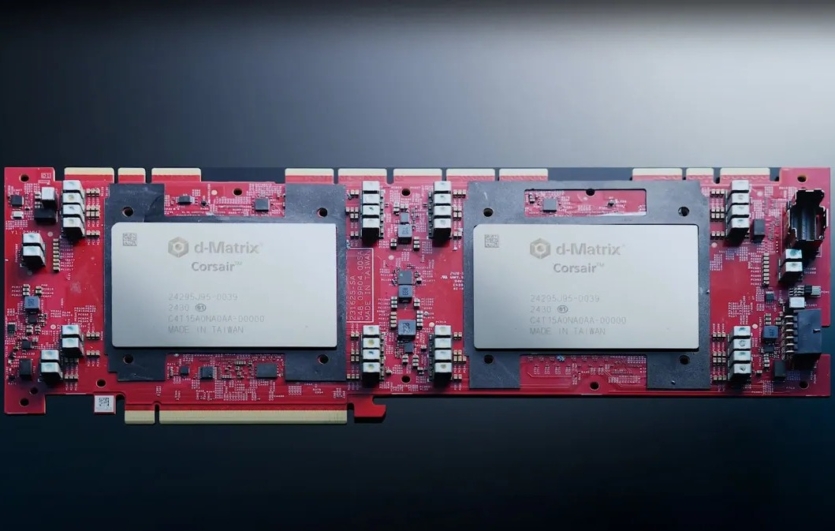

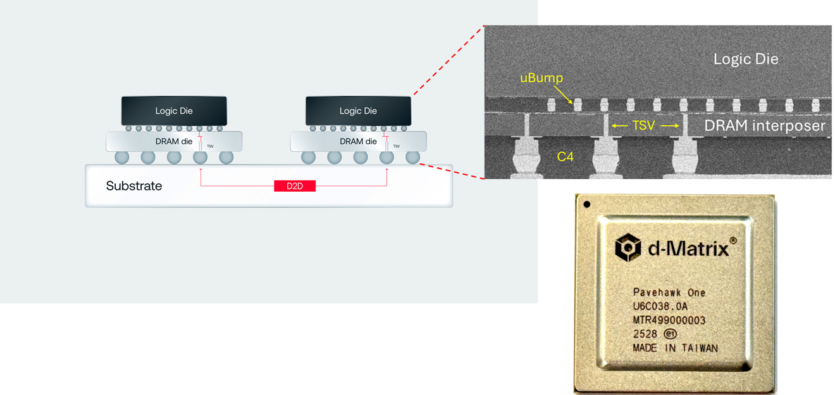

To address this, d-Matrix is testing its Pavehawk 3DIMC silicon chip in the lab. The digital in-memory computing hardware currently consists of LPDDR5 memory chips with DIMC chips mounted on top of them, connected via an interposer, an intermediate layer that connects chips to a substrate or board.

This configuration allows the DIMC hardware to perform calculations directly in memory. The DIMC chips are configured for matrix-vector multiplication, a common operation in transformer-based AI models.
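To illustrate the operation the DIMC chips are said to accelerate, here is a minimal sketch of matrix-vector multiplication in plain Python. It is not d-Matrix's implementation; the function name `matvec` and the sample values are hypothetical. In a transformer, `W` would roughly correspond to a layer's weight matrix and `x` to the activation vector for a single token.

```python
# A minimal matrix-vector multiplication sketch, y = W @ x.
# Plain Python, no libraries; the matrix is stored as a list of rows.

def matvec(weights: list[list[float]], x: list[float]) -> list[float]:
    """Multiply an m x n matrix by an n-element vector."""
    n = len(x)
    result = []
    for row in weights:
        if len(row) != n:
            raise ValueError("each row must have as many elements as the vector")
        # One multiply-accumulate per weight: each weight is read once and used once.
        result.append(sum(w * xi for w, xi in zip(row, x)))
    return result


W = [[1.0, 2.0, 3.0],
     [4.0, 5.0, 6.0]]
x = [1.0, 0.5, 2.0]
print(matvec(W, x))
```

Note that every weight is fetched from memory for a single multiply-add, which is why this operation tends to stress memory more than compute.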

“We believe that the future of AI inference depends on rethinking not only compute, but also memory itself. AI inference is limited by memory, not just by the number of floating-point operations (FLOPs). Models are evolving rapidly, and traditional HBM memory systems are becoming very expensive, power-hungry, and bandwidth-limited. 3DIMC is a game changer. By stacking memory in three dimensions and integrating it more closely with compute, we significantly reduce latency, increase throughput, and open up new opportunities for efficiency,” says d-Matrix founder and CEO Sid Sheth.
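The point that inference is limited by memory rather than FLOPs can be made concrete with a roofline-style estimate. The sketch below is a back-of-the-envelope illustration, assuming 16-bit weights and a hypothetical accelerator with 1 PFLOP/s of compute and 3 TB/s of memory bandwidth; the function names and all figures are illustrative, not published specifications for d-Matrix or HBM products. Because each weight in a matrix-vector product is used only once, the operation performs roughly one FLOP per byte fetched, far below what such a chip could sustain.

```python
# Rough arithmetic-intensity estimate for matrix-vector multiplication (GEMV).
# All hardware figures below are hypothetical round numbers, not vendor specs.

def gemv_arithmetic_intensity(m: int, n: int, bytes_per_element: int = 2) -> float:
    """FLOPs performed per byte moved for an m x n matrix times an n-vector."""
    flops = 2 * m * n  # one multiply and one add per weight
    # Weights dominate traffic; input and output vectors are included for completeness.
    bytes_moved = (m * n + n + m) * bytes_per_element
    return flops / bytes_moved


def is_memory_bound(intensity: float, peak_flops: float, bandwidth_bytes: float) -> bool:
    """A kernel is memory-bound when its intensity is below the hardware ridge point."""
    ridge_point = peak_flops / bandwidth_bytes  # FLOPs the chip can do per byte it fetches
    return intensity < ridge_point


# Hypothetical accelerator: 1 PFLOP/s of compute, 3 TB/s of memory bandwidth.
PEAK_FLOPS = 1e15
BANDWIDTH = 3e12

ai = gemv_arithmetic_intensity(4096, 4096)  # 16-bit (2-byte) weights
print(f"intensity: {ai:.2f} FLOP/byte, ridge point: {PEAK_FLOPS / BANDWIDTH:.1f} FLOP/byte")
print("memory-bound" if is_memory_bound(ai, PEAK_FLOPS, BANDWIDTH) else "compute-bound")
```

Under these assumptions the compute units spend most of their time waiting on memory, which is the bottleneck that moving computation into or next to memory is meant to relieve.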

The company is currently working on Pavehawk 3DIMC in the lab and is already looking ahead to its next generation, Raptor. According to d-Matrix, Raptor will also be based on a chiplet design.

The startup expects Raptor to speed up AI inference by 10 times compared with HBM while consuming 90% less power. An HBM alternative is also attractive from a financial point of view. HBM is produced by only a few companies worldwide, including SK hynix, Samsung, and Micron, and prices for this memory remain high. SK hynix recently estimated that the HBM market will grow by 30% annually until 2030, with prices rising in line with demand. An alternative to this dominant technology may appeal to cost-conscious AI buyers, although memory designed exclusively for specific workloads and computations may seem too shortsighted to potential customers who fear a bubble.

Source: Tom’s Hardware
