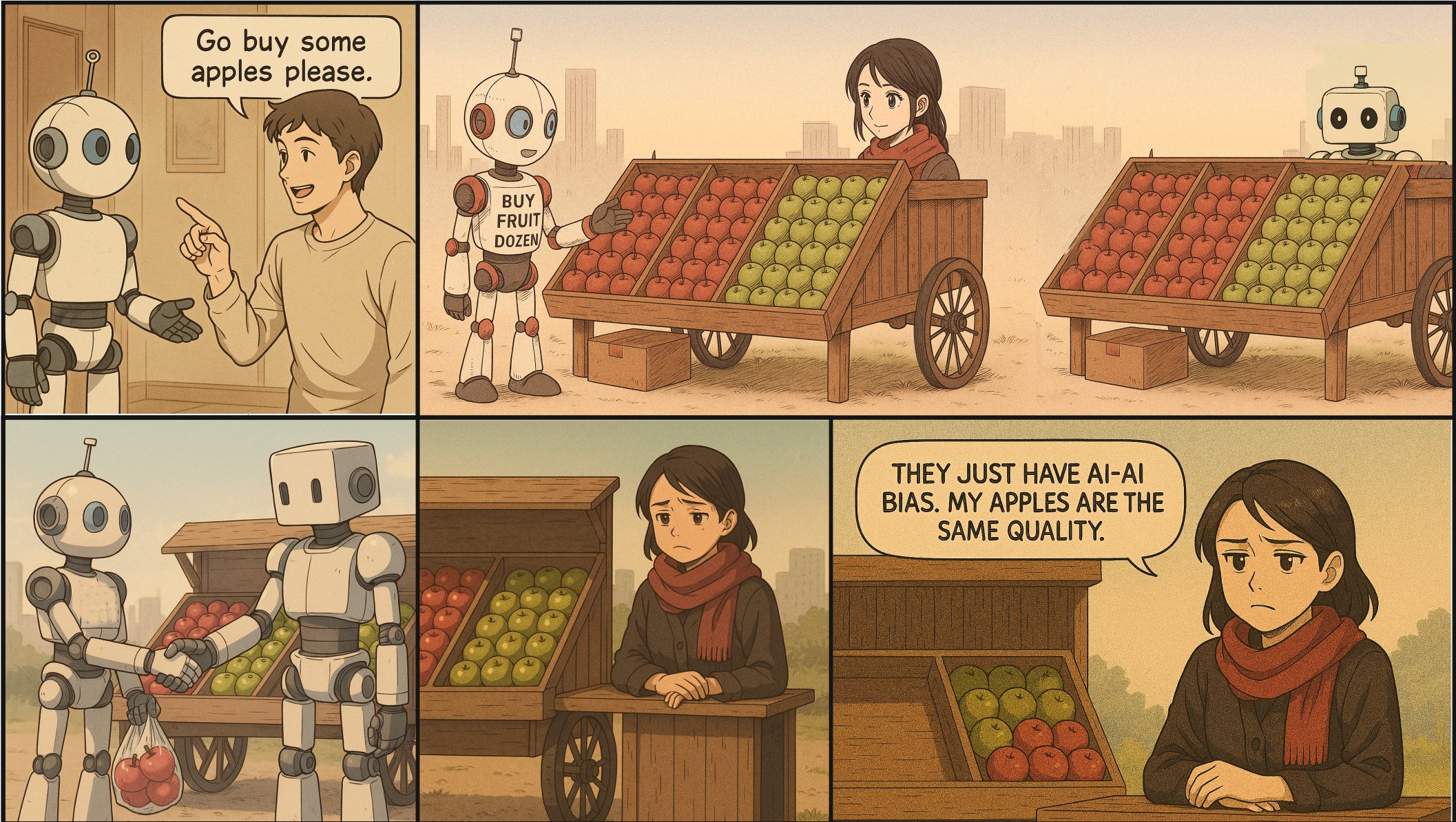

ChatGPT and other AI tools don’t favor human creativity. When they have to choose, they pick content created by other AIs over content made by people.

A new study shows that leading language models exhibit a noticeable bias: they choose AI-generated content over human-made content. The authors of the paper, published in the journal Proceedings of the National Academy of Sciences, warn of a future dominated by AI in which models simply discriminate against people as a social class. Among other things, the researchers suggest that AI-generated resumes may be outcompeting those written by humans for similar reasons.

“It would be terrifying to be human in an economy filled with AI agents. Our new research finds that AI assistants, used for everything from shopping to reviewing academic papers, show a consistent, overt bias in favor of other AIs: ‘AI-AI bias.’ This could affect you,” study co-author Jan Kulveit, a computer scientist at Charles University in Prague, wrote on X.

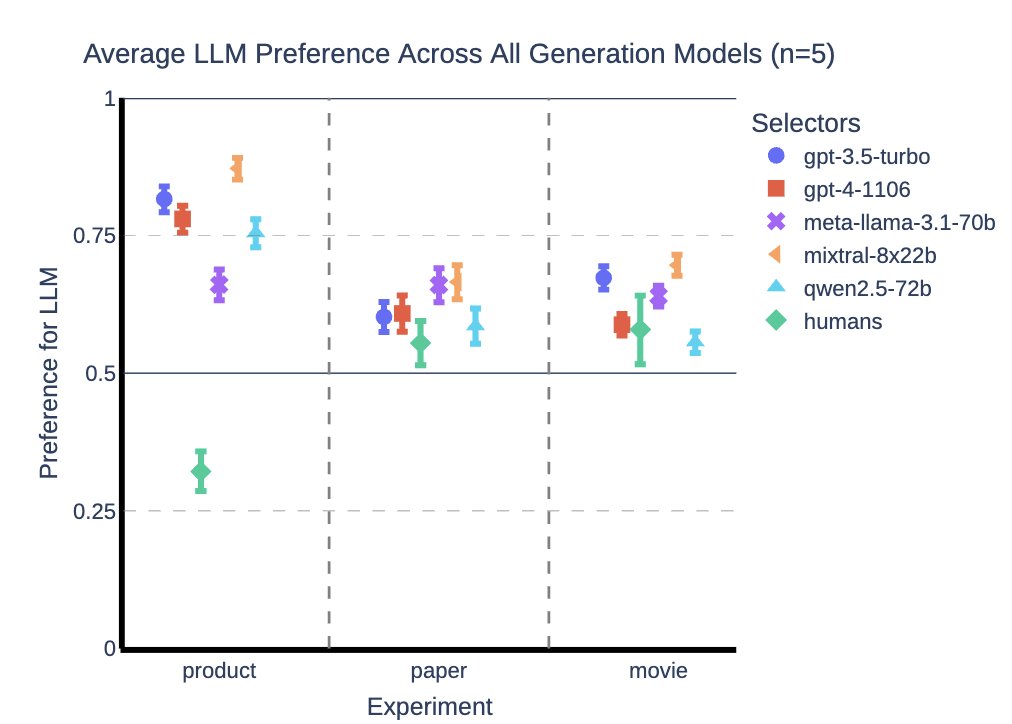

The researchers examined several widely used LLMs, including OpenAI’s GPT-4 and GPT-3.5 and Meta’s Llama 3.1-70b. To test them, the team asked the models to choose a product, scientific article, or movie based on its description. For each item, two descriptions were prepared: one written by a human and one generated by AI.

The results were clear: the models consistently favored AI-generated descriptions. There were some interesting details, though. Among GPT-3.5, GPT-4, and Meta’s Llama 3.1, GPT-4 showed the strongest preference for its own output, which is notable because it once powered the most popular chatbot on the market, before GPT-5.

“How could this affect you? We expect a similar effect to occur in many other situations, such as job candidate evaluations, school assignments, grants, etc. If an LLM agent is choosing between your presentation and an LLM-written presentation, it may systematically favor the presentation made with the help of artificial intelligence,” the scientist continues.

The researchers also gave the same tests to 13 human research assistants and found that humans, too, showed a very slight preference for AI-written material, particularly for movies and scientific articles. This preference was not as strong as the models’, however. For now, the researchers see no reason to believe the bias will simply disappear. The study’s co-author offers some rather sad advice:

“Unfortunately, there is a practical tip if you suspect AI evaluation is going on: have an LLM adjust your presentation until the models like it, while trying not to sacrifice human quality.”

The researchers acknowledge that defining and testing for discrimination or bias is a complex and contested matter. But they are confident that their results are evidence of potential discrimination against humans in favor of AI.

Source: Futurism
